An Amputee with an AI-Powered Hand! 🦾

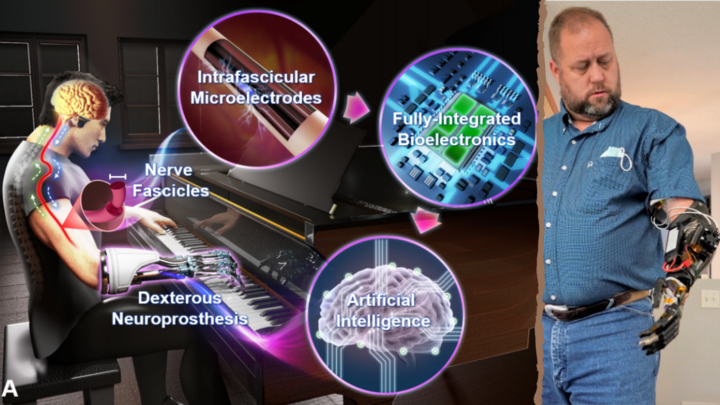

With this AI-powered nerve interface, the amputee can control a neuroprosthetic hand with life-like dexterity and intuitiveness.

(optional) You can watch the video about this article at the end!

In this article, I will talk about a randomly picked application of transformers from the 600 new papers published this week, adding nothing much to the field but improving the accuracy by 0.01% on one benchmark by tweaking some parameters.

I hope you are not too excited about this introduction because that was just to mess with transformers’ recent popularity. Of course, they are awesome and super useful in many cases, and most researchers are focusing on them, but other things exist in AI that are as exciting if not more! You can be sure I will cover exciting advancements of the transformers’ architecture applied to NLP, computer vision, or other fields, as I think it is very promising, but covering these new papers making slight modifications to them is not as interesting to me.

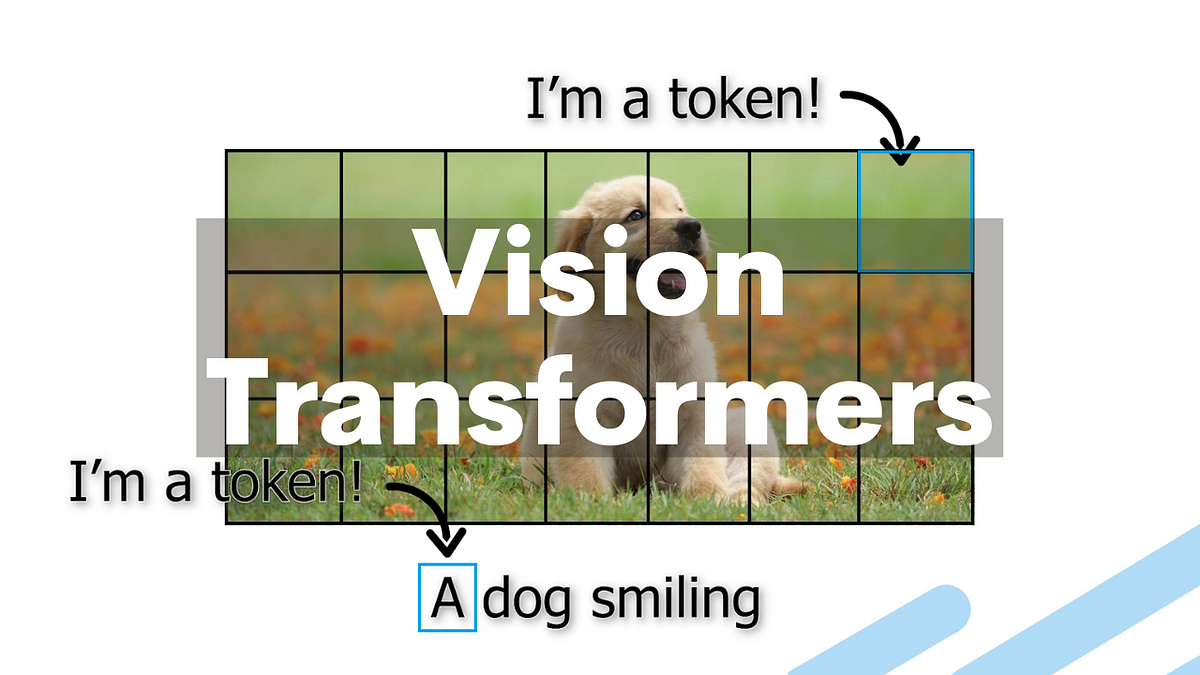

Just as an example, here are a couple of papers shared in March that apply transformers to image classification. Since they are all quite similar and I already covered one of them (below), I think that is enough for an overview of the current state of transformers in computer vision.

Now let’s enter the real subject of this article! It has nothing to do with transformers or even GANs, and it has no hot words at all except maybe ‘Cyberpunk’. Yet it is one of the coolest applications of AI I’ve seen in a while! It attacks a real-world problem and can change the lives of many people. Of course, it is less glamorous than changing your face into an anime character or a cartoon, but it is much more useful.

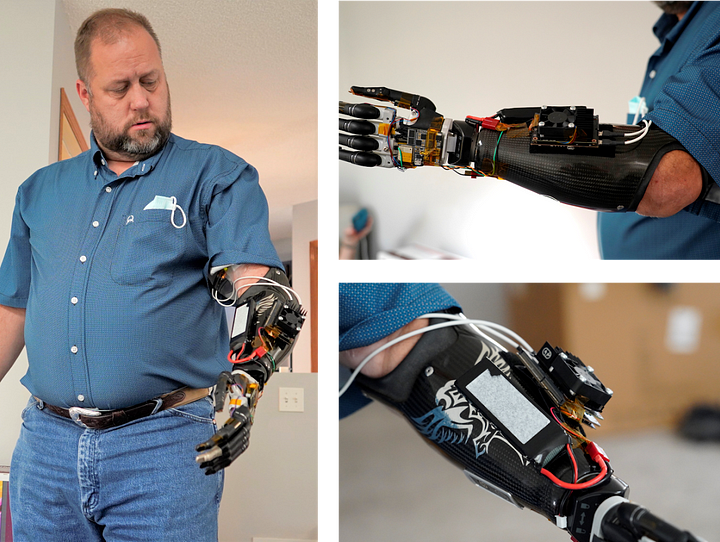

I present to you the “Portable, Self-Contained Neuroprosthetic Hand with Deep Learning-Based Finger Control” by Nguyen, Drealan et al. Or, in the words of one of the authors, the “Cyberpunk” arm.

Before diving into it, I just wanted to remind you of the free NVIDIA GTC event happening next week, with lots of exciting AI news, and of the Deep Learning Institute giveaway I am running for subscribers to my newsletter. If you are interested, I talked about this giveaway in more detail in my previous video (see below).

Now, let’s jump right into this unique and amazing new paper.

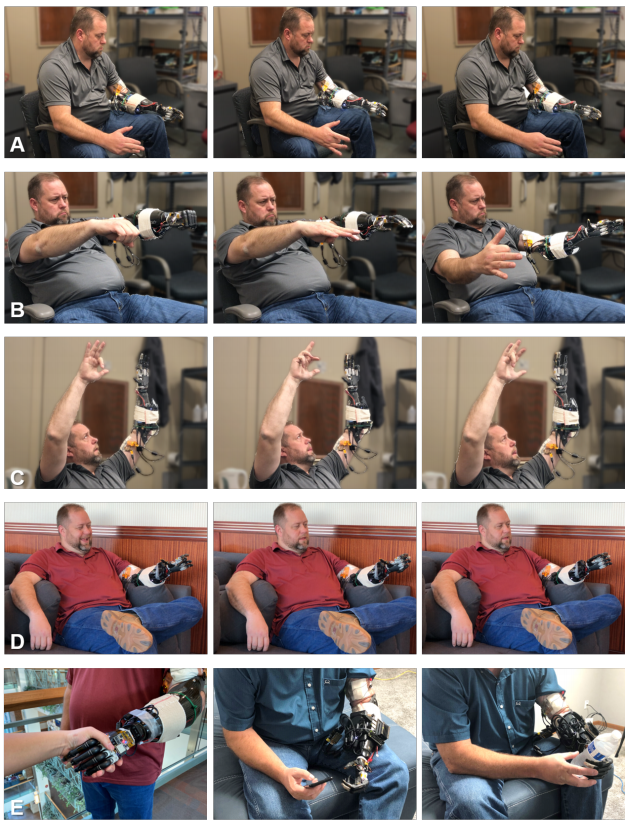

This new paper applies deep learning to a neuroprosthetic hand to allow real-time control of individual finger movements, all done directly within the arm itself! With as little as 50 to 120 milliseconds of latency and 95 to 99% accuracy, an arm amputee who lost his hand 14 years ago can move his cyborg fingers just like a normal hand! This work shows that deploying deep neural network applications directly on wearable biomedical devices is not only possible but also extremely powerful!

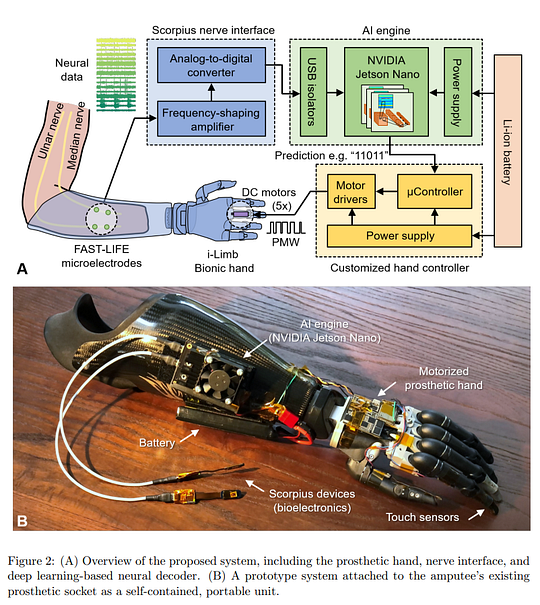

Here, deep learning is used to process and decode nerve data acquired from the amputee to obtain dexterous finger movements. The problem here is that in order to be low-latency, this deep learning model has to be on a portable device with much lower computational power than our GPUs. Fortunately, there has been recent development of compact hardware for deep learning uses to fix this issue.

In this case, they used the NVIDIA Jetson Nano module, which is specifically designed to deploy AI in autonomous applications. It allowed the use of GPUs and powerful libraries like TensorFlow and PyTorch inside the arm itself. As they state, “This offers the most appropriate trade-off among size, power, and performance for our neural decoder implementation.” That was the goal of this paper: to address the challenge of efficiently deploying deep learning neural decoders on a portable device used in real-life applications, toward long-term clinical uses.

Of course, there are a lot of technical details that I will not go into as I am not an expert, like how the nerve fibres and bioelectronics connect together, the microchip designs that allow this simultaneous neural recording and stimulation, or the implementation of the software and hardware that support this real-time motor decoding system. You can read a great explanation of these in their associated papers if you’d like to learn more; they are all linked in the references. But let’s dive a little more into the deep learning side of this insane creation. Here, their innovation lies in optimizing the deep learning motor decoder to reduce its computational complexity as much as possible on the Jetson Nano platform.

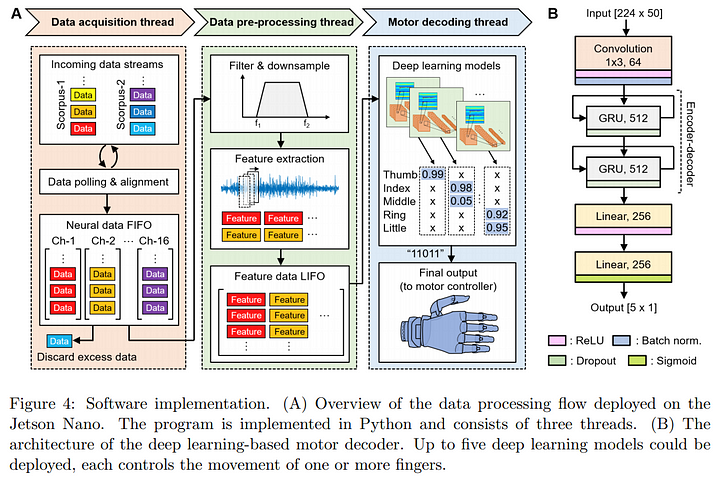

This image shows an overview of the data processing flow on the Jetson Nano. First, the data, in the form of peripheral nerve signals from the amputee’s arm, is sent into the platform. Then, it is pre-processed. This step is crucial: it cuts the raw input neural data into trials and extracts their main features in the temporal domain before feeding them to the models. The pre-processed data corresponds to the main features of 1 second of past neural data from the amputee, cleaned of noise sources. Then, this processed data is sent into the deep learning model, whose final output controls each finger’s movement. Note that there are five outputs, one for each finger.
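
To make this pre-processing step a bit more concrete, here is a minimal sketch in plain Python. It is not the authors' pipeline: the 100 Hz sampling rate, the half-second step between windows, and the choice of mean absolute value as the temporal feature are all assumptions made for illustration, and the function names `cut_into_trials` and `mean_absolute_value` are hypothetical.

```python
from typing import List


def cut_into_trials(signal: List[float], window: int, step: int) -> List[List[float]]:
    """Slice a 1-D signal into overlapping windows ("trials") of `window` samples."""
    return [signal[start:start + window]
            for start in range(0, len(signal) - window + 1, step)]


def mean_absolute_value(trial: List[float], n_bins: int) -> List[float]:
    """Split a trial into `n_bins` equal bins and return the mean |x| of each bin."""
    bin_size = len(trial) // n_bins
    return [sum(abs(x) for x in trial[i * bin_size:(i + 1) * bin_size]) / bin_size
            for i in range(n_bins)]


# Toy stand-in for one nerve channel: 3 seconds at an assumed 100 Hz.
SAMPLE_RATE = 100
signal = [((i % 10) - 5) / 5.0 for i in range(3 * SAMPLE_RATE)]

# One-second windows of past data, advanced by half a second each time (assumed step).
trials = cut_into_trials(signal, window=SAMPLE_RATE, step=SAMPLE_RATE // 2)

# Each trial becomes a short feature vector that a decoder could consume.
features = [mean_absolute_value(trial, n_bins=10) for trial in trials]

print(len(trials), len(features[0]))
```

Each one-second trial is reduced to a handful of numbers, which is the general idea behind extracting temporal features before feeding the model: the network sees a compact summary of the recent signal instead of every raw sample.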

To quickly go over the model they used, as you can see, it starts with a convolutional layer. This is used to identify different representations of the input data. In this case, you can see the 64, meaning that there are 64 convolutions made using different filters, so 64 different representations. These filters are the network parameters learned during training to correctly control the hand when finally deployed.

Then, we know that time is very important in this case since we want fluid movements of the fingers, so they opted for gated recurrent units, or GRUs, to represent this time dependency when decoding the data. GRUs allow the model to understand what the hand was doing in the past second (what is first encoded) and what it needs to do next (what is decoded). To keep it simple, GRUs are just an improved version of the “basic” recurrent neural network, or RNN. They address the problems RNNs have with long inputs by adding gates that keep only the relevant information from past inputs in the recurrent process, instead of overwriting it with the new input every single time. This basically allows the network to decide what information should be passed to the output. As in recurrent neural networks, the one-second data, here in the form of 512 features, is processed iteratively using the repeated GRU blocks. Each GRU block receives the input at the current step and the previous output to produce the following output.

Finally, this decoded information is sent to linear layers, which basically just propagate the information and condense it into probabilities for each individual finger.
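
To illustrate what "adding gates" means in practice, here is a minimal sketch of a single GRU step in plain Python, followed by a linear layer that produces five values, one per finger. This is the textbook GRU formulation, not the authors' implementation: the layer sizes are toy values, the weights are random rather than trained, the convolutional layer is left out, the sigmoid on the output is an assumption, and names such as `gru_step` and `init_params` are hypothetical.

```python
import math
import random
from typing import Dict, List

Vector = List[float]
Matrix = List[Vector]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def affine(W: Matrix, x: Vector, U: Matrix, h: Vector, b: Vector) -> Vector:
    """Compute W @ x + U @ h + b using plain lists."""
    return [sum(w * xi for w, xi in zip(W[i], x))
            + sum(u * hi for u, hi in zip(U[i], h))
            + b[i]
            for i in range(len(b))]


def gru_step(x: Vector, h: Vector, p: Dict[str, list]) -> Vector:
    """One GRU step: combine the current input x with the previous state h."""
    # Update gate: how much of the state should be replaced by new information.
    z = [sigmoid(v) for v in affine(p["Wz"], x, p["Uz"], h, p["bz"])]
    # Reset gate: how much of the past state is used to build the candidate.
    r = [sigmoid(v) for v in affine(p["Wr"], x, p["Ur"], h, p["br"])]
    gated_h = [ri * hi for ri, hi in zip(r, h)]
    candidate = [math.tanh(v) for v in affine(p["Wh"], x, p["Uh"], gated_h, p["bh"])]
    # Blend old state and candidate (one common convention; some libraries swap z and 1 - z).
    return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, candidate)]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def init_params(n_in: int, n_hidden: int, rng: random.Random) -> Dict[str, list]:
    """Random, untrained weights for the three GRU transforms (z, r, h)."""
    p: Dict[str, list] = {}
    for gate in "zrh":
        p["W" + gate] = random_matrix(n_hidden, n_in, rng)
        p["U" + gate] = random_matrix(n_hidden, n_hidden, rng)
        p["b" + gate] = [0.0] * n_hidden
    return p


rng = random.Random(0)
N_IN, N_HIDDEN, N_FINGERS, N_STEPS = 4, 8, 5, 6  # toy sizes, not the paper's
params = init_params(N_IN, N_HIDDEN, rng)
W_out = random_matrix(N_FINGERS, N_HIDDEN, rng)

# A made-up feature sequence standing in for one second of pre-processed data.
sequence = [[rng.uniform(-1, 1) for _ in range(N_IN)] for _ in range(N_STEPS)]

h = [0.0] * N_HIDDEN
for x in sequence:  # each step sees the current input and the previous state
    h = gru_step(x, h, params)

# Linear layer plus sigmoid: one value between 0 and 1 per finger.
finger_outputs = [sigmoid(sum(w * hi for w, hi in zip(row, h))) for row in W_out]
print(finger_outputs)
```

The loop is the "repeated GRU blocks" idea from the figure: the same weights are reused at every step, and the state `h` carries forward whatever the gates decided was worth keeping.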

They studied many different architectures, as you can read in their paper, but this is the most computationally efficient model they could make, yielding an incredible accuracy of over 95%.

Now that we have a good idea of how the model works and know that it is accurate, some questions are still left. How does the person using it feel about it? Does it feel real? Does it work? In short, is this similar to a real arm?

As the patient himself said:

[Patient] I feel like once this thing is fine-tuned as finished products that are out there. It will be more life-like functions to be able to do everyday tasks without thinking of what positions the hand is in or what mode I have the hand programmed in. It’s just like if I want to reach and pick up something, I just reach and pick up something. […] Knowing that it’s just like my [able] hand [for] everyday functions. I think we’ll get there. I really do!

To me, these are the most incredible applications that we can work on with AI.

It directly helps real people improve their quality of life, and there’s nothing better than that! I hope you enjoyed this short read, and you can watch the video version to see more examples of this insane cyborg hand moving!

Thank you for reading, and just as he said in the video above, I will say the same about AI in general: “This is crazy cool”!

If you like my work and want to stay up-to-date with AI, you should definitely follow me on my other social media accounts (LinkedIn, Twitter) and subscribe to my weekly AI newsletter!

To support me:

- The best way to support me is by following me here on Medium or subscribing to my channel on YouTube if you like the video format.

- Support my work on Patreon

- Join our Discord community: Learn AI Together and share your projects, papers, best courses, find Kaggle teammates, and much more!

References

[1] Nguyen & Drealan et al. (2021) A Portable, Self-Contained Neuroprosthetic Hand with Deep Learning-Based Finger Control: https://arxiv.org/abs/2103.13452

[2] Luu & Nguyen et al. (2021) Deep Learning-Based Approaches for Decoding Motor Intent from Peripheral Nerve Signals: https://www.researchgate.net/publication/349448928_Deep_Learning-Based_Approaches_for_Decoding_Motor_Intent_from_Peripheral_Nerve_Signals

[3] Nguyen et al. (2021) Redundant Crossfire: A Technique to Achieve Super-Resolution in Neurostimulator Design by Exploiting Transistor Mismatch: https://experts.umn.edu/en/publications/redundant-crossfire-a-technique-to-achieve-super-resolution-in-ne

[4] Nguyen & Xu et al. (2020) A Bioelectric Neural Interface Towards Intuitive Prosthetic Control for Amputees: https://www.biorxiv.org/content/10.1101/2020.09.17.301663v1.full