NVIDIA EditGAN: Image Editing with Full Control From Sketches

Control any feature from quick drafts, and it will edit only what you want, keeping the rest of the image the same! A state-of-the-art sketch-based image editing model by NVIDIA, MIT, and UofT.

Watch the video!

Have you ever dreamed of being able to edit any part of a picture with quick sketches or suggestions? Changing specific features like the eyes, the eyebrows, or even the wheels of your car? Well, it is not only possible, it has never been easier than now with this new model called EditGAN, and the results are really impressive! You can basically improve, or meme-ify, any picture super quickly. Indeed, you can control whatever feature you want from quick drafts, and the model will apply only those modifications, keeping the rest of the image the same. This kind of control has long been sought after and has been extremely challenging to obtain with image synthesis and image editing AI models like GANs.

As we said, this fantastic new paper from NVIDIA, the University of Toronto, and MIT allows you to edit pictures with superb control over specific features from sketch inputs. Typically, controlling specific features requires huge datasets and experts who know which parts of the model to change to get the desired output image with only the wanted features changed. Instead, EditGAN learns from only a handful of labeled examples to match segmentations to images, allowing you to edit the images through their segmentation or, in other words, with quick sketches. It preserves the full image quality while allowing a level of detail and freedom never achieved before. This is a great jump forward, but what’s even cooler is how they achieved it, so let’s dive a bit deeper into their method!

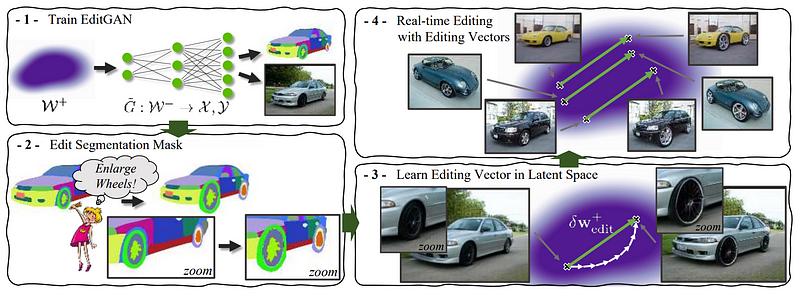

First, the model uses StyleGAN2 to generate images, which was the best image generation model available at the time of publication and is widely used in research. I won’t dive into the details of this model since I have already covered it in numerous articles with different applications, if you’d like to learn more about it. Instead, I will assume you have a basic knowledge of what StyleGAN2 does: it uses a type of model called a generator to transform a code from a condensed sub-space into an image. This code can be sampled directly or obtained by encoding an existing image into that sub-space. What’s important here is the generator.
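For readers who like to see the "code in, image out" idea in code, here is a toy NumPy sketch. It is not StyleGAN2: the one-layer mapping, the linear-plus-tanh synthesis step, the sizes, and the names `mapping` and `generate` are hypothetical stand-ins, chosen only to show that a generator is a fixed function from a latent code to an image.

```python
import numpy as np

LATENT_DIM = 8   # size of the latent code (hypothetical)
IMG_SIZE = 4     # the toy "image" is IMG_SIZE x IMG_SIZE grayscale pixels

rng = np.random.default_rng(0)
# Fixed random weights stand in for a pretrained generator that is never modified.
W_map = rng.normal(size=(LATENT_DIM, LATENT_DIM)) / np.sqrt(LATENT_DIM)
W_synth = rng.normal(size=(IMG_SIZE * IMG_SIZE, LATENT_DIM))

def mapping(z):
    """Map a random code z to an intermediate code w (loosely inspired by StyleGAN's mapping network)."""
    h = W_map @ z
    return np.where(h > 0, h, 0.2 * h)  # leaky ReLU

def generate(w):
    """Decode latent code w into an image with pixel values in [-1, 1]."""
    return np.tanh(W_synth @ w).reshape(IMG_SIZE, IMG_SIZE)

z = rng.normal(size=LATENT_DIM)   # sample a random latent code...
image = generate(mapping(z))      # ...and turn it into an image
print(image.shape)  # (4, 4)
```

Encoding an existing image would mean searching for the code that makes `generate` reproduce it; this sketch only covers the generation direction.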

As I said, the generator takes information from a sub-space, often referred to as the latent space, which holds a lot of information about our image and its features. But this space is high-dimensional, and we can hardly visualize it. The challenge is to identify which part of this sub-space is responsible for reconstructing which feature in the image. This is where EditGAN comes into play: it not only tells you which part of the sub-space does what but also lets you edit it automatically using another input, a sketch that you can easily draw. Indeed, it will encode your image, or simply take a specific latent code, and generate both the segmentation map of the picture and the picture itself.

This means that, by training a model to do so, the segmentation and the image are tied to the same latent code. This lets you control only the desired features without doing anything else: you simply change the segmentation image, and the picture follows. Only this new segmentation branch is trained; the StyleGAN2 generator stays fixed and keeps producing the original image. This allows the model to link the segmentations to the same sub-space the generator uses to reconstruct the image. Then, once it is trained, you can simply edit the segmentation: EditGAN searches for the change in the latent code that makes the generated segmentation match your edit, and since the image comes from that same code, it changes accordingly!
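Here is a minimal NumPy sketch of the training setup described above, under loud assumptions: the "generator" is a frozen random feature map, the segmentation branch is a per-pixel linear softmax classifier on those features, and the handful of labels come from a hypothetical "annotator" matrix `V_true`. All names and sizes are illustrative. The point it shows is that only the head's weights `V` are updated, while the generator weights are read and never written, and that image and segmentation are then produced from the same latent code.

```python
import numpy as np

rng = np.random.default_rng(0)
LATENT_DIM, N_PIXELS, FEAT_DIM, N_CLASSES = 8, 16, 6, 3

# Hypothetical frozen generator: latent code -> per-pixel feature vectors -> pixel values.
W_feat = rng.normal(size=(N_PIXELS, FEAT_DIM, LATENT_DIM))
w_pix = rng.normal(size=FEAT_DIM)

def features(w):
    """Per-pixel features of the frozen generator, shape (N_PIXELS, FEAT_DIM)."""
    return np.tanh(W_feat @ w)

def generate_image(w):
    """Pixel values decoded from the same features, shape (N_PIXELS,)."""
    return features(w) @ w_pix

def softmax(logits):
    e = np.exp(logits - logits.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

def train_seg_head(latents, label_maps, steps=300, lr=0.3):
    """Fit only the segmentation head V with full-batch gradient descent on cross-entropy."""
    feats = [features(w) for w in latents]  # generator is evaluated, never updated
    V = np.zeros((FEAT_DIM, N_CLASSES))
    losses = []
    for _ in range(steps):
        grad, loss = np.zeros_like(V), 0.0
        for F, y in zip(feats, label_maps):
            p = softmax(F @ V)
            idx = np.arange(len(y))
            loss -= np.log(p[idx, y]).mean()
            p[idx, y] -= 1.0  # d(loss)/d(logits) = softmax - one_hot
            grad += F.T @ p / len(y)
        V -= lr * grad / len(feats)
        losses.append(loss / len(feats))
    return V, losses

def segment(w, V):
    """Segmentation map for latent code w: one class index per pixel."""
    return (features(w) @ V).argmax(axis=1)

# A handful of labeled examples from a hypothetical annotator.
V_true = rng.normal(size=(FEAT_DIM, N_CLASSES))
latents = [rng.normal(size=LATENT_DIM) for _ in range(5)]
labels = [(features(w) @ V_true).argmax(axis=1) for w in latents]

W_before = W_feat.copy()
V, losses = train_seg_head(latents, labels)

w_new = rng.normal(size=LATENT_DIM)                     # an unseen latent code
image, seg = generate_image(w_new), segment(w_new, V)  # both come from the same code
```

Because the features are bounded by `tanh`, a small fixed learning rate is enough for this convex toy problem; a real segmentation branch on StyleGAN2 features would be a deeper network trained with a standard optimizer.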

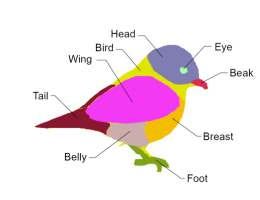

EditGAN basically assigns each pixel of your image to a specific class, such as head, ear, or eye, and controls these classes independently, using masks so that edits made in the latent space leave the pixels of the other classes untouched.
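A small NumPy sketch of what "assigning each pixel to a class" and "masking the other classes" can look like. The class list, the toy scores, and the helper names `label_map` and `class_mask` are hypothetical; in EditGAN, the per-pixel scores would come from the trained segmentation branch.

```python
import numpy as np

CLASSES = ["background", "head", "ear", "eye"]  # hypothetical label set

def label_map(logits):
    """(H, W, K) per-class scores -> (H, W) map holding one class index per pixel."""
    return logits.argmax(axis=-1)

def class_mask(labels, class_name):
    """Boolean (H, W) mask of the pixels assigned to class_name."""
    return labels == CLASSES.index(class_name)

# Toy 2x2 "image": each pixel gets its highest-scoring class.
logits = np.zeros((2, 2, len(CLASSES)))
logits[0, 0, CLASSES.index("head")] = 1.0
logits[0, 1, CLASSES.index("eye")] = 1.0
logits[1, 0, CLASSES.index("ear")] = 1.0
logits[1, 1, CLASSES.index("background")] = 1.0

labels = label_map(logits)
eye = class_mask(labels, "eye")  # pixels an "edit the eyes" request may touch
frozen = ~eye                    # pixels of all other classes, to be kept unchanged
print(labels.tolist())  # [[1, 3], [2, 0]]
```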

So each pixel gets its label, and rather than deciding which pixels to change directly, EditGAN works out in the latent space which labels to edit and reconstructs the image, modifying only the editing region. And voilà! By connecting a generated image with a segmentation map, EditGAN allows you to edit this map as you wish and apply these modifications to the image, creating a new version!
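A minimal sketch of the "edit the labels, let the latent code follow" step, under strong simplifying assumptions: both branches are frozen linear maps of the same latent code (`A` for the image, `B` for a per-pixel segmentation score), the editing region is a given boolean mask, and both loss terms are plain squared errors. EditGAN itself optimizes a latent edit for a real StyleGAN2 with its own losses; only the structure is shared here: match the edited segmentation inside the region, keep the image unchanged outside it. All names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
LATENT_DIM, N_PIXELS = 8, 16

# Hypothetical frozen linear stand-ins for two outputs that share one latent code w.
A = rng.normal(size=(N_PIXELS, LATENT_DIM)) / np.sqrt(LATENT_DIM)  # image branch
B = rng.normal(size=(N_PIXELS, LATENT_DIM)) / np.sqrt(LATENT_DIM)  # segmentation-score branch

def edit_loss(w_new, w, seg_edit, region, lam=1.0):
    """Sketch mismatch inside the region + lam * image change outside it."""
    r = region.astype(float)
    seg_term = np.sum((r * (B @ w_new - seg_edit)) ** 2)
    img_term = np.sum(((1.0 - r) * (A @ (w_new - w))) ** 2)
    return seg_term + lam * img_term

def edit_latent(w, seg_edit, region, lam=1.0, steps=200):
    """Gradient descent on edit_loss over a latent offset delta; A and B stay fixed."""
    r = region.astype(float)
    # Step size 1/L, where L upper-bounds the gradient's Lipschitz constant,
    # so every step lowers the loss.
    L = 2.0 * (np.linalg.norm(B, 2) ** 2 + lam * np.linalg.norm(A, 2) ** 2)
    delta = np.zeros_like(w)
    for _ in range(steps):
        seg_err = r * (B @ (w + delta) - seg_edit)  # mismatch with the sketch, region only
        img_err = (1.0 - r) * (A @ delta)            # image change outside the region
        grad = 2.0 * B.T @ seg_err + 2.0 * lam * A.T @ img_err
        delta -= grad / L
    return w + delta

w = rng.normal(size=LATENT_DIM)
seg_orig = B @ w
region = np.zeros(N_PIXELS, dtype=bool)
region[:4] = True        # pixels whose labels the user re-drew
seg_edit = seg_orig.copy()
seg_edit[region] = 2.0   # hypothetical target score for the sketched class
w_new = edit_latent(w, seg_edit, region)
```

Because the image and the segmentation are read from the same latent code, moving `w` to satisfy the sketch moves the image with it, while the second loss term discourages changes outside `region`; `lam` sets that trade-off.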

Of course, once trained with these few examples, it also works on unseen images.

And like all GANs, the results are limited to the kind of images it was trained on, so you cannot use it on images of cats if you trained it on cars. Still, it is quite impressive, and I love how researchers try to provide intuitive ways to play with GANs, like using sketches instead of parameters. The code isn’t available for the moment, but it should be available soon, and I’m excited to try it out! This was just an overview of this amazing new model, and I strongly invite you to read their paper for a deeper technical understanding.

Let me know what you think, and I hope you’ve enjoyed this article as much as I enjoyed learning about this new model! Watch the video to see more results!

AI ethics segment by Martina Todaro

«Just recently, a new law in Norway makes it illegal for advertisers and social media influencers to share retouched promotional photos online when not disclosed. The amendment requires disclosures for edits made after the image was taken and before, such as Snapchat and Instagram filters that modify one's appearance. According to Vice, examples of edits that people who are being paid for pictures are required to label include "enlarged lips, narrowed waists, and exaggerated muscles," among other things [1].» Can we predict whether this will become the norm in other countries too?

I remember when, in the early 2000s, photo retouching was a job for graphic designers. It was an investment only companies and professionals could afford. The task has since become easier for everyone with the help of Artificial Intelligence. Thanks to Nvidia and several other Big Tech companies, retouching pictures is now so easy, widespread, and socially accepted that people do not even question whether it is truly beneficial or not. Like many other services, it is free, so why not use it?

In a consensus-based network society, where drawing attention is crucial and being interesting is valuable, individuals (not only influencers) have strong incentives to edit their images: the market is thriving.

Of course, since the practice is so widespread and accepted, it should be quite normal to expect other people's images to be retouched. But that does not seem to be the case. It has been shown that the unrealistic beauty standards imposed by social media have a negative influence on users' self-esteem.

The consequences include eating disorders, mental health issues, and suicidal thoughts among young people [2].

The trade-off is manifestly part of the broader "social dilemma": there is a misalignment between the interests of the group and the incentives of individuals.

That is why, in my opinion, Norway is just leading the march: many other institutions must (and probably will) take action on the issue. The UK has already moved, and Europe will most likely follow.

My suggestion: if you advertise your work and use retouched human images, don't wait for a regulation that forces you to declare it. Everybody knows anyway, so get an edge on your competitors by choosing to be an ethical company or professional. It is a small step, but it can mean a lot for the next generations.


Thank you once again to Weights & Biases for sponsoring the video and article, and to you who are still reading! See you next week with a very special and exciting video about a subject I love!

If you like my work and want to stay up-to-date with AI, you should definitely follow me on my other social media accounts (LinkedIn, Twitter) and subscribe to my weekly AI newsletter!

To support me:

- The best way to support me is by being a member of this website or subscribing to my channel on YouTube if you like the video format.

- Follow me here or on Medium

- Want to get into AI or improve your skills? Read this!

References

- Ling, H., Kreis, K., Li, D., Kim, S.W., Torralba, A. and Fidler, S., 2021. EditGAN: High-Precision Semantic Image Editing. In Thirty-Fifth Conference on Neural Information Processing Systems (NeurIPS 2021).

- Code and interactive tool (arriving soon): https://nv-tlabs.github.io/editGAN/