Biologically-inspired Neural Networks for Self-Driving Cars

Imitating the nematode's nervous system to process information efficiently, this new intelligent system is more robust, more interpretable, and faster to train than current deep neural network architectures with millions of parameters.

Deep Neural Networks And Other Approaches

Researchers are always looking for new ways to build intelligent models. Very deep supervised models work well when there is enough data to train them, but generalizing well, and doing so efficiently, remains one of the hardest problems. We can always go deeper, but that comes at a high computational cost. So, as you may already be thinking, there must be another way to make machines intelligent, one that needs less data or at least fewer layers in our networks.

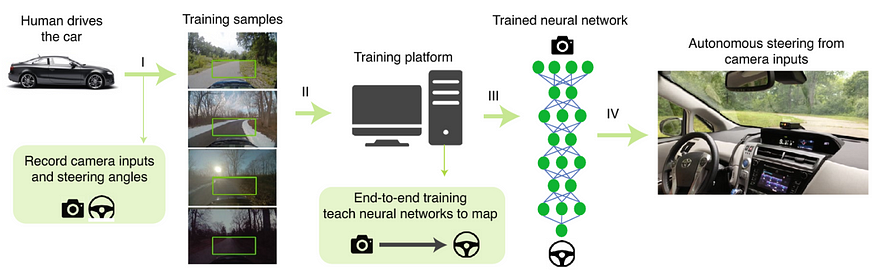

One of the most complicated tasks that machine learning researchers and engineers are currently working on is self-driving cars. This is a task where every scenario needs to be covered, and the system must be completely stable, before it can be deployed on our roads. Training a self-driving car typically requires many training examples from real human drivers, as well as a very deep neural network able to understand these data and reproduce human behavior in any situation.

Researchers from IST Austria and MIT have successfully trained a self-driving car using a new artificial intelligence system based on the brains of tiny animals, such as threadworms [1]. They achieved this with only a few neurons controlling the car, compared to the millions of neurons needed by popular deep neural networks such as Inception, ResNet, or VGG. Their network was able to completely control a car using only 19 control neurons and 75,000 parameters, rather than millions!

The Brain-Inspired Intelligent System

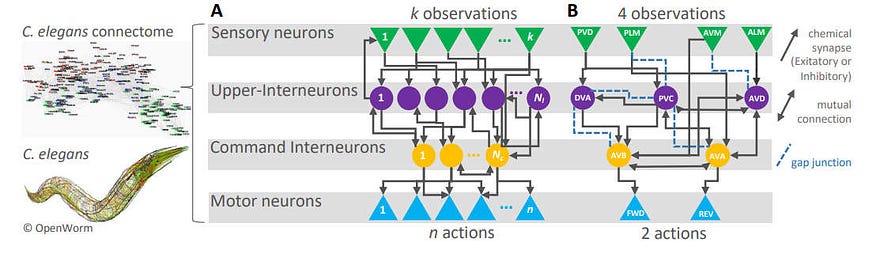

Since it is so small, it doesn’t have to be a “black box” like the deep models, where we do not completely understand what’s going on at each stage of the network. Indeed, it can be understood by humans. As Professor Radu Grosu, head of the research group, says: “The nematode C. elegans, for example, lives its life with an amazingly small number of neurons, and still shows interesting behavioral patterns. This is due to the efficient and harmonious way the nematode’s nervous system processes information”.

The nematode’s nervous system shows that there is still room for improvement in deep learning models. If nematodes can show interesting behavior with such an extremely small number of neurons, after evolving this near-optimal nervous system structure, we should be able to reproduce it on a machine, too. This neural system allows the nematodes to perform locomotion, motor control, and navigation, which is exactly what we aim for in applications like autonomous driving.

Following this neural system, they “developed new mathematical models of neurons and synapses” called “liquid time constant” or LTC neurons, as Professor Thomas Henzinger said.
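To give a feel for what a “liquid time constant” means, here is a minimal sketch of a single neuron whose effective time constant changes with its input. It assumes a simplified form of the LTC dynamics, dx/dt = -(1/tau + f) * x + f * A, with a sigmoid gate f. The parameter names (`tau`, `A`, `w`, `b`) and their values are assumptions for illustration, and the exact neuron and synapse model in [1] may differ.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def ltc_step(x, inp, dt=0.01, tau=1.0, A=1.0, w=2.0, b=0.0):
    """One explicit-Euler step of a single (simplified) LTC neuron.

    Assumed dynamics: dx/dt = -(1/tau + f) * x + f * A,
    where f = sigmoid(w * inp + b) is an input-dependent gate in (0, 1).
    """
    f = sigmoid(w * inp + b)
    dxdt = -(1.0 / tau + f) * x + f * A
    return x + dt * dxdt

def effective_time_constant(inp, tau=1.0, w=2.0, b=0.0):
    """The gate f also appears in the decay term, so the time constant depends on the input."""
    f = sigmoid(w * inp + b)
    return tau / (1.0 + tau * f)

def simulate(inp, steps=2000, dt=0.01):
    """Hold the input constant and let the neuron state settle."""
    x = 0.0
    for _ in range(steps):
        x = ltc_step(x, inp, dt=dt)
    return x

if __name__ == "__main__":
    for inp in (-5.0, 0.0, 5.0):
        print(inp, round(simulate(inp), 4), round(effective_time_constant(inp), 4))
```

In this sketch, a stronger input shortens the effective time constant, so the neuron reacts faster when it is strongly driven and more slowly otherwise.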

One way to make the network simpler was to make it sparse, meaning that not every cell is connected to every other cell. When a cell is not active, it sends no output (or a 0 output, which is much cheaper to compute), so fewer connections and fewer active cells mean less computation time. They also changed the way each cell works. As Dr. Ramin Hasani says, “The processing of the signals within the individual cells follows different mathematical principles than previous deep learning models”.
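The sparsity idea can be sketched with a connectivity mask: each source cell is wired to only a few target cells, and only those connections are ever computed. The function names, layer sizes, and the random wiring rule below are hypothetical and chosen for illustration. This is not the wiring procedure from the paper.

```python
import random

def sparse_mask(n_pre, n_post, fanout, seed=0):
    """Wire each of n_pre source cells to `fanout` randomly chosen target cells."""
    rng = random.Random(seed)
    mask = [[0] * n_post for _ in range(n_pre)]
    for i in range(n_pre):
        for j in rng.sample(range(n_post), fanout):
            mask[i][j] = 1
    return mask

def count_synapses(mask):
    """Number of connections that actually exist."""
    return sum(sum(row) for row in mask)

def masked_forward(inputs, weights, mask):
    """Weighted sum per target cell, skipping every connection the mask disables."""
    n_post = len(mask[0])
    out = [0.0] * n_post
    for i, x in enumerate(inputs):
        for j in range(n_post):
            if mask[i][j]:
                out[j] += x * weights[i][j]
    return out

if __name__ == "__main__":
    mask = sparse_mask(n_pre=12, n_post=6, fanout=2)
    print("sparse synapses:", count_synapses(mask))
    print("dense synapses: ", 12 * 6)
```

With 12 source cells, 6 target cells, and a fan-out of 2, the sparse layer has 24 connections where a fully connected one would have 72.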

IST Austria And MIT’s New Intelligent System — NCPs

Let’s now look a little deeper at how this new system works.

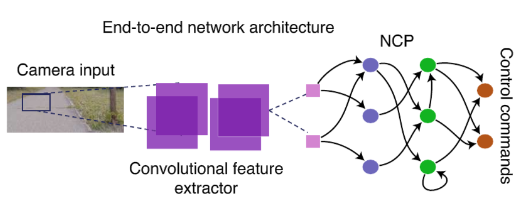

It consists of two parts. First, there’s a compact convolutional neural network, which is used to extract structural features from the pixels of the input images. Using this information, the network decides which parts of the image are important or interesting and passes only those parts to the second system.

The second part, which they call a “control system”, steers the vehicle using decisions made by a set of biologically-inspired neurons. This control part is also called a neural circuit policy, or NCP. Basically, it feeds the outputs of the compact convolutional model to only 19 neurons in an RNN structure inspired by the nematode’s nervous system, which controls the vehicle and keeps it in its lane, following the architecture shown above. You can find more details about the implementation of these NCP networks in their paper or in the clear guide they made on their GitHub [2].
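The data flow of this two-part design can be sketched in a few lines. This is only an illustration of the pipeline shape. The feature extractor is a stand-in that averages pixels, the controller is a plain dense tanh recurrent update and not the authors' LTC neurons or sparse wiring, the weights are random and untrained, and the feature size of 32 is an assumption. Only the count of 19 control neurons comes from the article.

```python
import math
import random

N_FEATURES = 32   # assumed size of the convolutional head's output
N_NEURONS = 19    # number of control neurons reported in the article

def extract_features(frame):
    """Stand-in for the convolutional head: average a flat pixel list in chunks."""
    chunk = max(1, len(frame) // N_FEATURES)
    return [sum(frame[i * chunk:(i + 1) * chunk]) / chunk for i in range(N_FEATURES)]

def make_controller(seed=0):
    """Random, untrained input and recurrent weights for the small controller."""
    rng = random.Random(seed)
    w_in = [[rng.uniform(-0.5, 0.5) for _ in range(N_FEATURES)] for _ in range(N_NEURONS)]
    w_rec = [[rng.uniform(-0.5, 0.5) for _ in range(N_NEURONS)] for _ in range(N_NEURONS)]
    return w_in, w_rec

def control_step(state, features, w_in, w_rec):
    """One recurrent update: each neuron sees the features and the previous state."""
    new_state = []
    for n in range(N_NEURONS):
        total = sum(w * f for w, f in zip(w_in[n], features))
        total += sum(w * s for w, s in zip(w_rec[n], state))
        new_state.append(math.tanh(total))
    return new_state

def drive(frames, seed=0):
    """Run the pipeline over a sequence of frames and return one steering value per frame."""
    w_in, w_rec = make_controller(seed)
    state = [0.0] * N_NEURONS
    steering = []
    for frame in frames:
        state = control_step(state, extract_features(frame), w_in, w_rec)
        steering.append(state[-1])  # the last neuron plays the role of the motor neuron
    return steering

if __name__ == "__main__":
    frames = [[0.5] * 128 for _ in range(3)]  # three dummy "images" of 128 pixels
    print(drive(frames))
```

The point of the sketch is the split of responsibilities: perception compresses each frame into a short feature vector, and a very small recurrent controller carries state from frame to frame and outputs the steering command.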

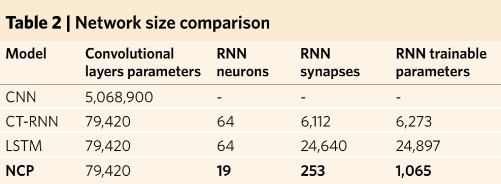

This is where the biggest reduction in parameters happens. Mathias Lechner explains that “NCPs are up to 3 orders of magnitude smaller than what would have been possible with previous state-of-the-art models”, as you can see in Table 2 shown below. The two parts of the system are trained simultaneously and work together to drive the car.

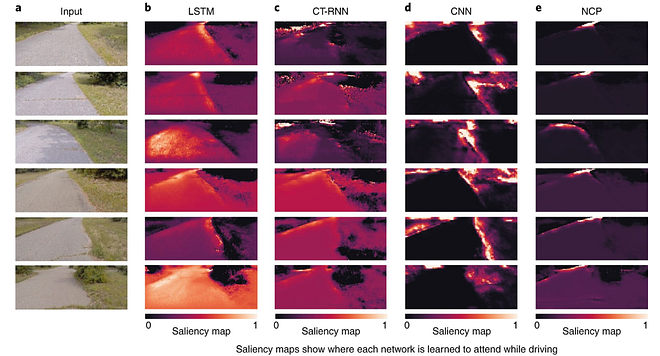

Because the network is so small, they were able to see where the system was focusing its attention in the input images. They discovered that having such a small network extract the most important parts of the picture made the few decision neurons focus exclusively on the curbside and the horizon. This is unique behavior among artificial intelligence systems, which currently analyze every single detail of an image and use far more information than needed.

Just take a second to look at how little information is sent into the NCP network compared to other types of networks. Looking at this image, it is easy to see why the NCP is more efficient and faster to compute than current approaches.

Plus, while input noise, such as rain or snow in lane-keeping applications, is a big problem for current approaches, their NCP system demonstrated strong resistance to input artifacts. Thanks to its architecture and novel neuron model, it keeps its attention on the road horizon even when the camera input is noisy, as you can see in the short video below.

Conclusion

Their novel approach is more robust, faster to train due to its small size, and more interpretable since it enables clear visualization of the activity inside the neural network. It’s a new generation of deep models and a huge step for the field of artificial intelligence, bringing new perspectives by following biological nervous systems.

As if that were not impressive enough, they also made the code publicly available. They even created complete tutorials on how to create and import their new NCP networks using the LTC neurons [3] and how to stack NCPs with other types of layers [4]. Everything is available through their GitHub.

Of course, this was a simple overview of this new paper. I strongly recommend reading the paper linked below for more information.

Thank you for reading!

References

[1] M. Lechner, R. Hasani, A. Amini, T. A. Henzinger, D. Rus and R. Grosu, Neural circuit policies enabling auditable autonomy, (2020), Nature Machine Intelligence

[2] M. Lechner and R. Hasani, Code for Neural Circuit Policies Enabling Auditable Autonomy, (2020), GitHub

[3] M. Lechner and R. Hasani, Google Colab: Basic usage, (2020), Google Colab

[4] M. Lechner and R. Hasani, Google Colab: Stacking NCPs with other layers, (2020), Google Colab