2025: The Year of AI Agents

Million-Token Context? Cheap Tools? Perfect Time for Agents

Watch the video!

Have you noticed how every slide deck, keynote, LinkedIn post and podcast this year highlight the same thing: 2025 is the year of AI agents.

This feels like the usual AI hype, but I wanted to know the foundation behind all this buzzword use once again, so I dug in. What I found is that the excitement, that is by the way shared amongst the most renown personas in the industry, is actually grounded in eight very practical shifts, and the best way to understand them is to start from the very bottom, with a lonely language model that can do nothing more than predict the next token.

On its own, a model just chats. We give it a prompt, it spits back text, and that’s the end of the story. It can’t reach for a calculator, can’t browse the web, can’t remember who you are, and certainly can’t go click a download button when you ask it to install Word.

So, for years, we kept bolting things on — retrieval for fresh knowledge, code interpreters for math and data, long-term memories for user context. These bolt-ons are what we usually bundle under the label “tools” inside a “workflow.” A workflow is simply you, or me hard-coding a chain of steps: maybe we fetch documents, maybe we let the model write SQL, maybe we pass the result back for summarization. It can get crazy elaborate — routers like notdiamond that decide which model to call, majority-vote ensembles that negotiate the best answer, which is when you ask the same question to a few models, or the same model multiple times, and aggregate the answers to get the best one, or add feedback loops where a second model acts as a judge — but every branching path is still traced out by a developer ahead of time with clear prompts or conditions the system follows.

That rigidity is fine as long as the problem stays predictable, but the moment we need the system itself to choose tools, adjust its plan mid-flight, or cope with surprises in its environment, we need something more autonomous.

That’s where agents walk in.

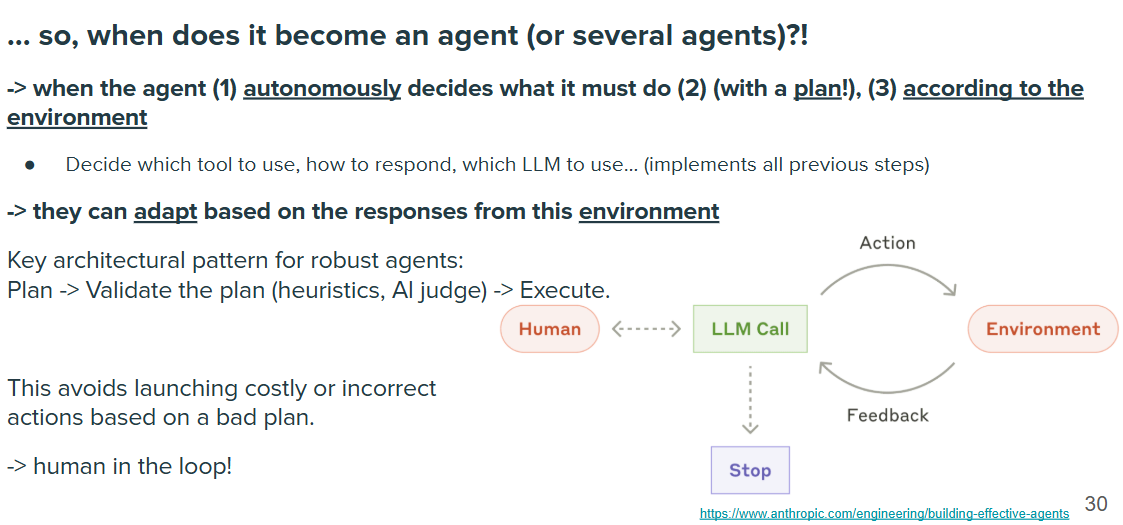

The industry agrees that a workflow becomes an agent when it follows three core principles: being something that (1) autonomously decides what it must do (2) (with a plan!), (3) according to the environment.

Give an agent a high-level goal and a toolbox, and — instead of us routing every request by hand — the agent sketches its own plan, calls the tools it THINKS it needs, rewrites the plan when a pop-up blocks a web page or when something unexpected happens, and keeps a memory of what just happened so the next step makes sense. For example, you wantr it to remember the log ins for a platform it used yesterday, so it needs to store and retrieve that somewhere, while also needing to know it has a memory and know it can look in there if there’s some kind of log in requested. See how it’s all much more autonomous, or could we say “human”?

Think of Devin, the AI software engineer that resolves real GitHub issues end-to-end; it builds, tests, debugs, and revises code in a terminal loop the way a junior developer would, not because someone spelled out every keystroke, but because the agent reasons through the task on its own. You can almost see an agent as replacing someone rather than a pure tool, as with a workflow.

There’s also Julius, the AI Data Analyst, a perfect real-world example of what we just defined. Julius combines four core capabilities that line up exactly with the agentic theory: perception, reasoning & planning, action, and feedback. It perceives every kind of input you throw at it — text, code, tables, database, spreadsheets, even images — and turns them into structured data it can reason with. Then it plans out next steps using both symbolic logic and large-scale language modelling, remembering every detail of the conversation so it doesn’t lose the thread. When it needs to act, Julius doesn’t ask you to micromanage; it just calls the right tool, whether that’s running Python, installing a library, scraping a website, or generating a visualization. And after each step it checks its own work, figures out what went right or wrong, and adjusts — closing the loop automatically. For example, I uploaded an export of my user data for each landing page on our Towards AI Academy platform and just asked it to help me understand which page worked and which didn’t. It then studied the whole file, ran experiments by itself to digest the data and gave me back super useful details on the landing pages themselves. But what’s even cooler are these final takeaways that I could directly apply to improve our platform conversion. That perception-planning-action-feedback cycle is precisely what separates an autonomous agent from a fancy chatbot, and Julius delivers it out of the box.

Of course, autonomy is expensive. Agents read and write many more tokens, run more sub-models, and need stronger safety nets. So before you try to implement one, you may want to use this checklist:

First, is the task complex enough that the variability is worth the cost? Will a wrong turn be catastrophic or just mildly annoying? Because even if you have 99% accuracy with your models, agentic systems will loop over and over and make many more interactions, which will eventually lead to errors.

Second, is the problem complex enough? Usually, a workflow is enough and this term is just used to spread hype. For example, does the agent need multiple tools that you don’t know when it should or shouldn’t use it in advance?

Third, if you don’t exactly know the exact process to follow step by step or exactly what style the user wants to receive, then the autonomy the agents bring can become quite interesting.

And lastly, do you have the budget to let the thing think? Agents will indeed use way more tokens just by coming up with a plan, iterating with it, trying out different parths, etc. It’s way more costly and has more randomness than just pure LLM calls in a clear workflow.

Anthropic shared a nice slide about this, saying that if the tax is complex enough, adds value, is doable, and the error cost is low, then it’s a potential agentic solution.

Likewise, they had another slide demonstrating these points with their agent product Claude code, which is basically Claude with terminal access. Here, they sharer that the complexity is good enough since solving github issues and making PRs is quite complex, it adds value (just think of the engineers’ salaries), it has potential since models like Claude are trained on lots of code and, most importantly, the cost of error is low since there are unit tests and approval in place to make sure nothing too dramatic happens.

So, the moment those answers tilt toward “yes,” like in Claude code’s case, an agent becomes compelling — and 2025 is the tipping point because eight background trends have finally matured at once. Just keep in mind that, unless you know your problem is too complex, yet you also know that LLMs are powerful enough to ultimately do the task, which is a very tight and complicated middleground to reach, you typically want to start with simple workflows and grow from there! Otherwise, building agents is pretty much a business in itself, and not just a tiny side project!

Alright, now that we’ve seen what are agents and why they exist, let’s dive into these 8 reasons I promised explaining why they are starting to come out now:

First, model quality improvements from pre-training alone are flattening out; benchmarks like MMLU keep inching forward, but not at the break-neck pace of 2023 and early 2024. That plateau pushes researchers to squeeze more juice at inference time, which is at the moment the model exchanges with users, through planning and tool use — classic agent territory.

Second, all models are becoming quite similar: Gemini, Claude 4, GPT, DeepSeek, Mixtral — their scores are converging, which means you can swap them in and out of an agentic system without rewriting half your stack and prompts.

Third, context windows exploded into the million-token range, so an agent can carry its rolling memory, a browser dump, and a chunk of company policy all at once, instead of juggling them in and out of short-term memory.

Fourth, token prices keep dropping while, fifth the generation speeds keep growing, shrinking the premium you pay for those long, tool-heavy conversations.

Sixth, we now have reasoning-optimized models, architectures that deliberately spend more compute during inference. They are now standard offerings rather than research toys, so agents can inspect, critique, and refine their own drafts without killing latency. I made a video about reasoning models if you’d be interested in learning more how they are made and how they work!

Seventh, the tool ecosystem grows exponentially faster: OpenAI’s structured-output hits perfect reliability, and Google’s Agent-to-Agent Protocol landed in April to let agents from different vendors talk over a shared standard, while Anthropic’s open-sourced Model Context Protocol, or MCP, gave us a plug-and-play way for models to reach file systems, cloud drives, and business apps, so wiring up new tools is suddenly a weekend project instead of a month of glue code. We also covered the MCP and agent-to-agent protocols in another video recently if you’d like to learn more about these.

And eighth — the quiet but crucial one — best-practice safety tooling matured: automated judges, human-in-the-loop reviews, red-team simulators. The cost of an error is no longer a show-stopper because we have systematic ways to catch the worst failures on the fly.

Here’s the recap:

Put together, these shifts make autonomy the next plausible path for all developers to try implementing. Workflows still rule for simple jobs, and they should — always pick the simplest thing that could work. But when the decision tree is too tangled to hard-code, when your tool list changes every sprint, when your user base expects bespoke answers and remembers how you spoke to them yesterday, an agent shines. Give it a goal, a memory, and the keys to your toolkit, then let it decide whether to call a search API, fire off a SQL query, or draft an email on your behalf. Validate its plan with automated checks first and human review for the scary steps, and then hit execute and watch it loop until the job is done.

That looping autonomy is why 2025 feels different. A year ago, agents were flashy demos that didn’t work so well, like when Devin exploded but then disappointed everyone. Today, they’re sliding into real product back-ends: Devin ships fixes in enterprise CI pipelines even though it’s too complex to work all the time, we see new systems like Manus every few weeks and Claude’s MCP-powered agents are popping everywhere.

The ground truth is simple: the primitives — cheap tokens, wide context, interoperable tools — finally caught up with the vision. And that means the buzzword “agent” is about to fade into the background the same way “LLMs” did; we’ll just assume software can sense, plan, and act. So when the next presentation declares this is the year of agents, know that it’s not just hype. Even though there is always a bit too much hype in this field. It’s your reminder that the infrastructure is ready, and from now on, autonomy is growingly a viable option.

I hope you’ve enjoyed this article! If you’d like to learn more about agents and build your own, please check out our agents course on the Towards AI Academy made in partnership with my friend at Decoding ML. We teach you to build agents from the ground up! Check it with the first link below.

If you’d like to have an agent do all your analysis, try it out with the first link below! And thank you for reading— I’ll see you in the next one!