DeepMind Uses AI to Predict More Accurate Weather Forecasts

More than 50 expert meteorologists ranked DeepMind’s new model ahead of current nowcasting methods for accuracy and usefulness in 89% of cases


You’ve almost certainly planned a day trip to the beach, checked the weather before leaving, seen that it would be sunny, and then watched it start raining just as you arrived. This, or something similar, has happened to all of us. We talk about the weather all the time, for two big reasons: it has a big impact on our lives and activities, and we sometimes have nothing better to talk about. Most of us agree that weather forecasts for the next few hours seem completely random, especially when it comes to rain.

Well, there’s a reason for that: predicting rain is highly complex. These short-term weather predictions are called precipitation nowcasting, and various methods are used to predict what will happen in the next two hours. Weather forecasting in general relies on powerful numerical weather prediction systems that predict the weather by solving physical equations. They are quite powerful for long-term predictions but struggle to produce fine-grained forecasts for the sky right above your head at a specific time of day. It’s just like statistics: it is easy to predict what an average human will do in a situation but nearly impossible to predict what a particular individual will do. If you’d like to go deeper into these mathematical models, I explained how they work in more detail in my video about global weather prediction using deep learning. Even though we have a lot of radar data to predict what will happen, these mathematical and probabilistic methods are not precise enough. You can see where this is going. When there’s data, there’s AI.

Indeed, this lack of precision may change in the future, in part thanks to DeepMind. DeepMind just released a generative model that more than 50 expert meteorologists ranked ahead of widely used nowcasting methods for accuracy and usefulness in 89% of cases! Their model focuses on predicting precipitation over the next two hours and does so surprisingly well. Being a generative model means that it generates its forecasts as complete, realistic-looking radar maps, and it can produce several plausible futures for the same input instead of a single prediction. It basically takes past radar data and creates future radar data: using both the temporal and spatial structure of past observations, it generates what the radar could look like in the near future.
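
To make “past radar data in, future radar data out” concrete, here is a minimal sketch of how a long radar recording could be cut into training examples. It assumes one radar frame every 5 minutes, 4 past frames (20 minutes) as input, and 18 future frames (90 minutes) as the target; the function name `make_nowcast_examples` and the toy 2x2 frames are hypothetical, not DeepMind’s data pipeline.

```python
from typing import List, Tuple

Frame = List[List[float]]  # one radar image: rows x columns of rain rates (mm/h)

def make_nowcast_examples(
    frames: List[Frame],
    n_context: int = 4,   # assumption: 4 past frames = 20 minutes at 5-minute spacing
    n_future: int = 18,   # assumption: 18 future frames = 90 minutes
) -> List[Tuple[List[Frame], List[Frame]]]:
    """Slide a window over a radar sequence and split each window into
    (past frames the model sees, future frames it must generate)."""
    window = n_context + n_future
    examples = []
    for start in range(len(frames) - window + 1):
        past = frames[start : start + n_context]
        future = frames[start + n_context : start + window]
        examples.append((past, future))
    return examples

# Two hours of hypothetical 2x2 radar frames, one every 5 minutes (24 frames).
radar = [[[float(t), 0.0], [0.0, float(t)]] for t in range(24)]
examples = make_nowcast_examples(radar)
print(len(examples))  # 3 overlapping (past, future) pairs
```

Overlapping windows are used here simply because they squeeze more examples out of one recording; a real pipeline may sample windows differently.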

You can think of it like a Snapchat filter, which takes your face and generates a new version of it with modifications. To train such a generative model, you need lots of examples of both real human faces and the kind of face you want to generate. After training on them for many hours, you end up with a powerful generative model. Such models are often trained as GANs (generative adversarial networks), where a generator competes with a discriminator, and only the generator is kept and used on its own afterward. If you are not familiar with generative models or GANs, I invite you to watch one of the many videos I made about them, like this one about Toonify.
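
For reference, the original GAN formulation (Goodfellow et al., 2014, not cited in this article) trains a generator $G$ and a discriminator $D$ against each other with the minimax objective below. DeepMind’s model uses its own variant of the adversarial losses, so treat this as the generic idea rather than their exact loss.

```latex
\min_G \max_D \;
\mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
+ \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

Here $D(x)$ is the probability the discriminator assigns to $x$ being real, and $z$ is random noise fed to the generator. The discriminator tries to push this objective up by telling real from generated samples apart; the generator tries to push it down by fooling the discriminator. Once training is done, $D$ is discarded and only $G$ is used.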

One of the most basic architectures for image-to-image generation is the UNet. It takes an image (past radar data, in this case), encodes it using trained parameters, and decodes this encoded information into a new image, which in our case is the radar data for the same area over the next few minutes.

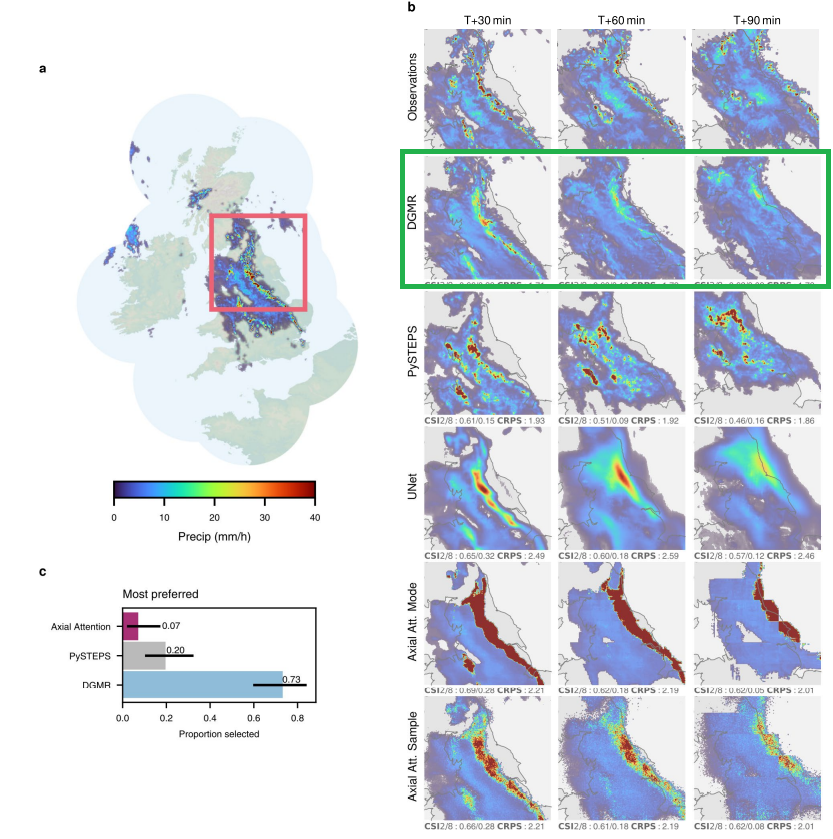

Here’s what it looks like when feeding a typical UNet with past radar data, compared with what it should look like (the target).

You can see that it is relatively good, but not very precise, and surely not precise enough to be used in our daily lives. Here’s a comparison with PySTEPS, a widely used probabilistic nowcasting approach that extrapolates the motion of past radar echoes. It’s a bit better, but you can see that it is not perfect either. These hand-designed probabilistic methods are hard to improve much further with math equations alone, so trying different approaches becomes interesting. Also, having a lot of radar data to train on is quite encouraging for deep learning approaches.
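
One common explanation for why a UNet-style forecast looks smooth and imprecise (our addition, not a claim made in this article) is that a deterministic model trained to minimize pixel-wise squared error learns to predict the average of all plausible futures. The toy below assumes a 1-D strip of four grid cells and two equally likely outcomes: the rain cell moves left or right. The “blurry” forecast that splits the rain between both places gets a lower expected error than either sharp guess, even though it never matches what actually happens.

```python
from typing import List

def mse(pred: List[float], target: List[float]) -> float:
    """Mean squared error between two equal-length rain-rate strips."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def expected_mse(pred: List[float], outcomes: List[List[float]]) -> float:
    """Average MSE of one forecast over equally likely outcomes."""
    return sum(mse(pred, o) for o in outcomes) / len(outcomes)

# Two equally likely futures for a 1-D strip of 4 grid cells (rain in mm/h).
rain_moves_left = [10.0, 0.0, 0.0, 0.0]
rain_moves_right = [0.0, 0.0, 0.0, 10.0]
outcomes = [rain_moves_left, rain_moves_right]

sharp_forecast = rain_moves_left          # commits to one future
blurry_forecast = [5.0, 0.0, 0.0, 5.0]    # cell-wise mean of both futures

print(expected_mse(sharp_forecast, outcomes))   # 25.0
print(expected_mse(blurry_forecast, outcomes))  # 12.5
```

A generative model sidesteps this trade-off by sampling one sharp, plausible future at a time instead of averaging them all into a single smeared map.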

This is why DeepMind created a GAN-like architecture made specifically for this task. And here are the results. You can see how much closer it is to reality, with more fine-grained details. Really impressive! They achieved that by using both the temporal and spatial information in past radar data to generate what the radar data could look like in the near future.

By the way, if you find this interesting, I invite you to share the knowledge by sending this article to a friend. I’m sure they will love it, and they will be grateful to learn something new because of you! And if you don’t, no worries, thank you for reading!

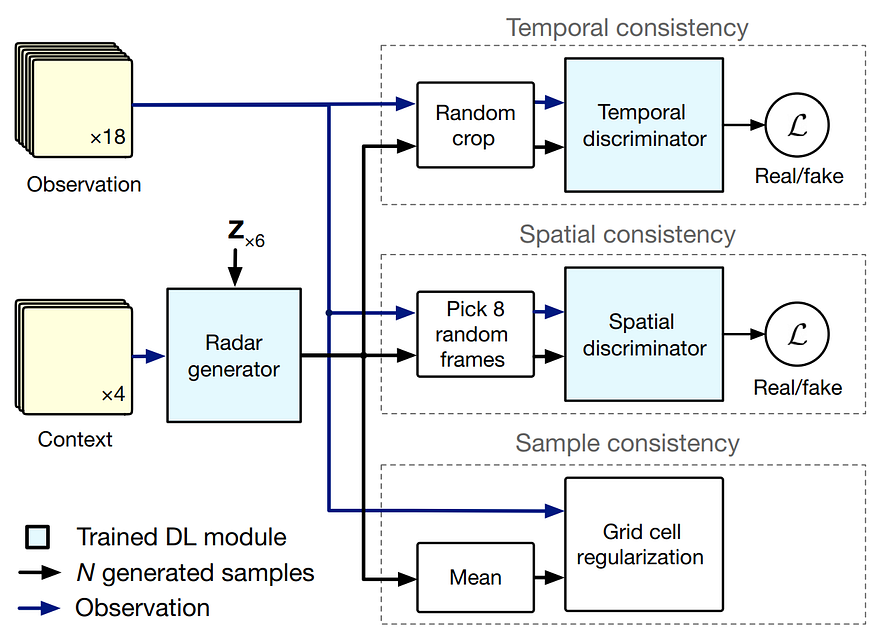

DeepMind’s model

So, more precisely, the previous 20 minutes of radar observations are sent to the model to generate 90 minutes of possible future radar frames. It is trained like any GAN: the learning process is guided by penalties that measure how its generated radar predictions differ from the real future radar frames, which we have in our training dataset. As you can see here, there are two losses and a regularization term, which are the penalties that guide our model during training. The two losses come from two discriminator networks, one focused on time and one on space, that learn to tell real radar sequences from generated ones.

The first one is a temporal loss. It forces the model to be consistent over multiple frames by comparing its generated sequences with real data over a given span of time (or frames), so that they are temporally realistic. This removes weird jumps or inconsistencies over time that couldn’t happen in the real world.

The second loss does the same thing for space. It ensures spatial consistency by comparing the actual radar data with our generated prediction at individual frames. In short, it forces the model to be “spatially intelligent” and produce sharp, confident predictions instead of the large, blurry predictions we saw for UNet.

Last but not least is the regularization term. It penalizes differences, grid cell by grid cell, between the real radar sequences and the average of several predictions generated for the same input, instead of comparing predictions one by one like the two losses do. This improves performance and produces more location-accurate predictions.
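
The regularization term is the most concrete of the three, so here is a minimal sketch of the idea as described above: average several generated forecasts for the same input, then compare that average with the real radar cell by cell. The weighting that makes heavy-rain cells count more (`cell_weight`, capped at an assumed 24 mm/h) and the toy grids are our assumptions for illustration; the paper’s exact formulation also averages over whole sequences and batches.

```python
from typing import List

Grid = List[List[float]]  # rain rate per grid cell (mm/h)

def cell_weight(rain: float, cap: float = 24.0) -> float:
    """Hypothetical weighting: heavier observed rain counts more, clipped at `cap`."""
    return min(rain + 1.0, cap)

def grid_cell_regularizer(samples: List[Grid], target: Grid) -> float:
    """Weighted mean absolute error between the *mean* of several generated
    samples (same input, different random noise) and the observed grid."""
    n = len(samples)
    rows, cols = len(target), len(target[0])
    total = 0.0
    for i in range(rows):
        for j in range(cols):
            mean_pred = sum(s[i][j] for s in samples) / n
            total += abs(mean_pred - target[i][j]) * cell_weight(target[i][j])
    return total / (rows * cols)

observed = [[0.0, 4.0], [2.0, 0.0]]
samples = [
    [[0.0, 6.0], [2.0, 0.0]],  # individual samples may differ from the truth...
    [[0.0, 2.0], [2.0, 0.0]],
]
print(grid_cell_regularizer(samples, observed))  # 0.0: ...but their mean matches it
```

Because only the average is constrained, each individual sample stays free to be sharp and varied (the adversarial losses take care of realism), while the samples as a group are pulled toward the right locations and amounts of rain.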

So you send some observations, get the model’s predictions, compare them with the real radar data using the three terms we just covered, and update your model based on the differences. Then, you repeat this process many times with all your training data to end up with a powerful model that learns how the weather changes and can, ideally, generalize this behavior accurately to most new data it receives afterward.
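
The predict, compare, update cycle can be sketched with a deliberately tiny stand-in: a one-parameter model that predicts the next frame as `a` times the last one, trained with plain gradient descent on squared error. This is not DeepMind’s model or loss (there are no discriminators here); the decay factor 0.8, the data, the learning rate, and the function name `train` are made up purely to show the shape of the loop.

```python
from typing import List, Tuple

Pair = Tuple[List[float], List[float]]  # (last observed frame, next real frame)

def train(pairs: List[Pair], lr: float = 0.01, epochs: int = 200) -> float:
    a = 0.0  # the toy model's single parameter
    for _ in range(epochs):                    # repeat over all training data
        for last, real_next in pairs:
            pred = [a * x for x in last]       # 1. send observations, get a prediction
            # 2. compare: gradient of the mean squared error with respect to `a`
            grad = sum(2 * (p - y) * x for p, y, x in zip(pred, real_next, last)) / len(last)
            a -= lr * grad                     # 3. update the model based on the difference
    return a

# Synthetic data: rain everywhere decays to 80% of its value in one step.
frames = [[5.0, 1.0, 3.0], [2.0, 4.0, 0.0], [1.0, 1.0, 6.0]]
pairs = [(f, [0.8 * x for x in f]) for f in frames]
print(round(train(pairs), 3))  # 0.8
```

In a GAN, step 3 alternates between updating the discriminators and updating the generator, but the overall rhythm of predicting, scoring, and adjusting is the same.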

You can see in the examples above how accurate their results are, whereas UNet is accurate but blurry, and PySTEPS overestimates the rainfall intensity over time. As they say, no method is without limitations: theirs struggles with longer-term predictions and, like most deep learning applications, with rare events that do not appear often in the training data, which they will work on improving.
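
Comparisons like “accurate but blurry” or “overestimates the rainfall intensity” are usually backed by verification scores. One standard score in precipitation nowcasting, reported among others in the paper, is the critical success index (CSI): threshold both the forecast and the observation at a rain rate, then count hits, misses, and false alarms. Here is a minimal sketch, with an assumed 1 mm/h threshold and toy grids; the function name is ours.

```python
from typing import List

Grid = List[List[float]]  # rain rate per grid cell (mm/h)

def critical_success_index(pred: Grid, obs: Grid, threshold: float = 1.0) -> float:
    """CSI = hits / (hits + misses + false alarms) for rain >= threshold."""
    hits = misses = false_alarms = 0
    for pred_row, obs_row in zip(pred, obs):
        for p, o in zip(pred_row, obs_row):
            forecast_rain, observed_rain = p >= threshold, o >= threshold
            if forecast_rain and observed_rain:
                hits += 1
            elif observed_rain:
                misses += 1
            elif forecast_rain:
                false_alarms += 1
    denom = hits + misses + false_alarms
    # Assumption: if no rain is forecast or observed, score it as perfect.
    return hits / denom if denom else 1.0

observed = [[0.0, 3.0, 5.0], [0.0, 2.0, 0.0]]
too_wet = [[2.0, 4.0, 6.0], [1.5, 3.0, 0.5]]  # catches all the rain, plus extra
print(critical_success_index(too_wet, observed))  # 0.6 (3 hits, 2 false alarms)
```

A forecast that paints rain too widely keeps all its hits but pays for its false alarms, which is how an overestimating method shows up in this score.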

Of course, this was just an overview of this new paper tackling the super interesting and useful task of precipitation nowcasting.

I invite you to read their excellent paper for more technical details about their implementation, training data, evaluation metrics, and the expert meteorologist study. They also released a Colab notebook you can play with to generate predictions. Both are linked in the references below. Thank you very much for reading, to those of you who are still here, and I will see you next week!

If you like my work and want to stay up-to-date with AI, you should definitely follow me on my other social media accounts (LinkedIn, Twitter) and subscribe to my weekly AI newsletter!

To support me:

- The best way to support me is by becoming a member of this website or subscribing to my channel on YouTube if you like the video format.

- Support my work financially on Patreon.

- Follow me here on Medium or on my blog.

- Want to get into AI or improve your skills? Read this!

References

- Ravuri, S., Lenc, K., Willson, M., Kangin, D., Lam, R., Mirowski, P., Fitzsimons, M., Athanassiadou, M., Kashem, S., Madge, S., Prudden, R., et al., 2021. Skillful Precipitation Nowcasting using Deep Generative Models of Radar. Nature. https://www.nature.com/articles/s41586-021-03854-z

- Colab Notebook: https://github.com/deepmind/deepmind-research/tree/master/nowcasting