Toonify: Transform Faces into Disney animated movie Characters

This AI can transform any picture of a face into a convincing Disney-style animated movie character!

Watch the video and support me on YouTube!

You can even use it yourself right now with a simple click using Toonify. But before testing it out, let’s quickly see how it works.

Toonify was built on a publicly available pre-trained model called StyleGAN2. It is a website that transforms any profile picture into a Disney/Pixar/DreamWorks-style animated movie character.

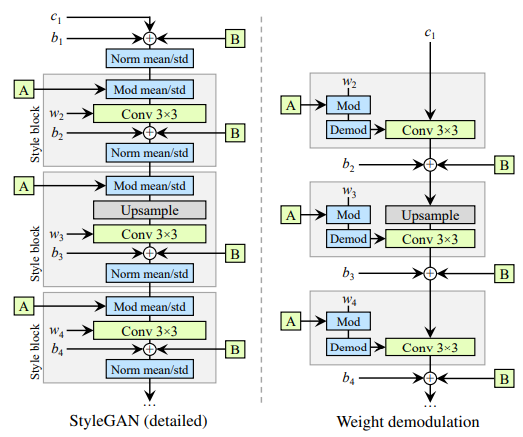

StyleGAN2 is a GAN architecture model made by the NVIDIA research team.

At the time of writing, it has state-of-the-art results in generative image modeling. In short, the team took the original StyleGAN architecture and refined it to get better style-related results.

This network is a generative adversarial network combined with ideas from style transfer. Style transfer is a technique that changes the style of a whole image based on the different styles it was trained on, as you can see here.

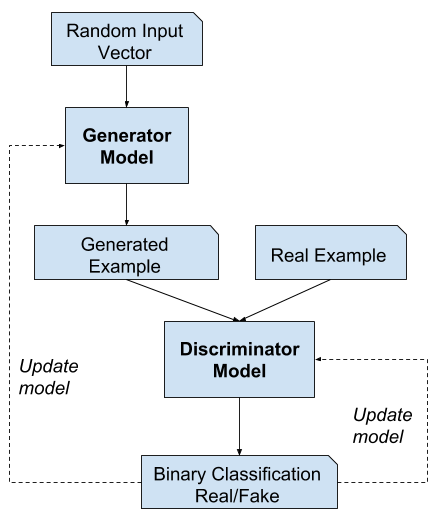

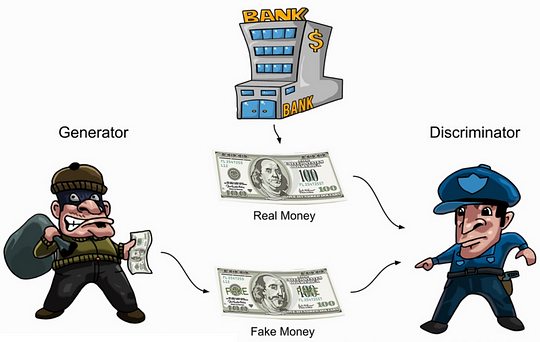
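To make the "style" idea a little more concrete: the original StyleGAN injects style through adaptive instance normalization (AdaIN), and StyleGAN2 replaces it with a related weight demodulation step. The snippet below is only a minimal sketch of AdaIN on a single feature channel in plain Python. The function name `adain` and the numbers are made up for illustration and are not taken from the StyleGAN2 code.

```python
import math

def adain(channel, style_scale, style_bias, eps=1e-8):
    """Normalize one feature channel, then re-scale and shift it with style values.

    Hypothetical helper for illustration only, not the StyleGAN2 implementation.
    """
    n = len(channel)
    mean = sum(channel) / n
    var = sum((x - mean) ** 2 for x in channel) / n
    std = math.sqrt(var + eps)
    # After normalization the channel has mean 0 and std ~1, so the style
    # values alone decide its final statistics.
    return [style_scale * (x - mean) / std + style_bias for x in channel]

# Made-up feature values and style parameters.
features = [1.0, 2.0, 3.0, 4.0]
styled = adain(features, style_scale=2.0, style_bias=10.0)
styled_mean = sum(styled) / len(styled)
styled_std = math.sqrt(sum((x - styled_mean) ** 2 for x in styled) / len(styled))
```

Whatever statistics the input features had, the output channel ends up with the mean and spread dictated by the style, which is the sense in which a style "controls" the synthesis.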

The generative adversarial network, or GAN, is the base architecture of the network. It is composed of two main parts. First, there’s what we call a generator network, which learns high-level attributes of the human faces it is trained on, such as the pose and identity of the person, to control the image synthesis process. This is where it learns the “style” of the images it is fed, such as a “human” style or a “Disney cartoon” style.

Then, to train this generator, we need the second part of the GAN architecture, which is a discriminator. In short, both generated and real images are fed to the discriminator during training, and it tries to “discriminate” the real images from the generated ones. Based on this feedback, the generator network can iteratively improve the quality of its fake images until it fools the discriminator.
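The tug-of-war between the two networks can be written as a pair of losses. Here is a minimal sketch in plain Python, assuming the non-saturating logistic loss, a common choice for GANs. The function names and the discriminator scores are made up for illustration, and a real training loop would compute these on batches of images with a deep learning framework.

```python
import math

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def discriminator_loss(real_logits, fake_logits):
    # The discriminator wants high scores for real images and low scores for fakes.
    real_term = sum(softplus(-r) for r in real_logits) / len(real_logits)
    fake_term = sum(softplus(f) for f in fake_logits) / len(fake_logits)
    return real_term + fake_term

def generator_loss(fake_logits):
    # The generator wants the discriminator to score its fakes as real.
    return sum(softplus(-f) for f in fake_logits) / len(fake_logits)

# Made-up discriminator scores (logits) for illustration.
early_fakes = [-3.0, -2.5]   # easily spotted as fake
later_fakes = [1.0, 2.0]     # starting to fool the discriminator
real_scores = [2.0, 3.0]

g_loss_early = generator_loss(early_fakes)
g_loss_later = generator_loss(later_fakes)
d_loss_early = discriminator_loss(real_scores, early_fakes)
d_loss_later = discriminator_loss(real_scores, later_fakes)
```

As the fakes get more convincing, the generator's loss goes down and the discriminator's loss goes up, which is exactly the feedback loop described above.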

As I said, Toonify started from a pre-trained model, which is a model that has already been trained on many images and yields good results. Then, they fine-tuned it by training the generator again on 300 additional pictures of animated movie characters from the Disney, Pixar, and DreamWorks worlds.

Then, by merging specific parts of two different GANs, one trained on human faces and another trained on animated movie characters, they were able to create a hybrid which has the structure of a cartoon face but photorealistic rendering. This gives a high-quality cartoon look to any person, and not just any cartoon style, but the one it has been trained on, which in this case is that of Disney, Pixar, and DreamWorks movie characters. You could follow the same steps with any other cartoon style if you have enough images of that specific style.

The most impressive part here is how they merged the two networks.

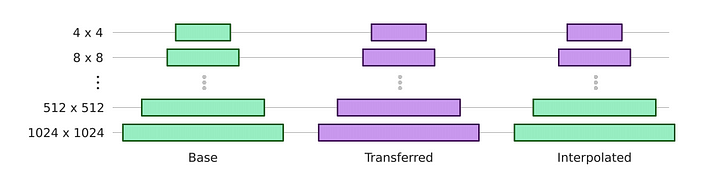

In short, they observed that the first few layers are responsible for the pose of the head and the shape of the face, which is controlled by the network trained on human faces. Then, they saw that the later layers are more about controlling things like lighting and texture, which is what we are looking for with the cartoon images. This allows them to generate images from an entirely novel domain, and to do so with a high degree of control.

This means that they could preserve the properties of the human face the network was fed and generate a cartoon version of it in a specific style, such as Disney characters, rather than generating images with random properties that do not really follow the shapes and “soul” of the input image, as most approaches do. They call this the “layer swapping” technique in their paper, which is linked in the references below along with the Toonify website, where you can try to cartoon yourself or any picture you want.
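Here is a rough sketch of what layer swapping could look like in plain Python. It assumes each generator is represented as a dictionary mapping a layer's output resolution to its weights. That representation, the function name `swap_layers`, and the toy weights are all hypothetical, not the authors' code. Which network supplies the coarse layers, which supplies the fine ones, and where to make the cut are choices you can experiment with.

```python
def swap_layers(low_res_source, high_res_source, swap_resolution):
    """Build a hybrid generator from two generators with the same architecture.

    Layers up to and including swap_resolution come from low_res_source,
    the remaining higher-resolution layers come from high_res_source.
    Hypothetical helper for illustration only.
    """
    if low_res_source.keys() != high_res_source.keys():
        raise ValueError("both generators must have the same layer resolutions")
    hybrid = {}
    for resolution in sorted(low_res_source):
        source = low_res_source if resolution <= swap_resolution else high_res_source
        hybrid[resolution] = list(source[resolution])  # copy, keep originals intact
    return hybrid

# Toy stand-ins for two generators: resolution -> weights (made-up numbers).
base_model = {4: [0.1], 8: [0.2], 16: [0.3], 32: [0.4], 64: [0.5]}
finetuned_model = {4: [1.1], 8: [1.2], 16: [1.3], 32: [1.4], 64: [1.5]}

# Coarse layers (pose, face shape) from one network, fine layers
# (lighting, texture) from the other.
hybrid = swap_layers(base_model, finetuned_model, swap_resolution=16)
```

Because the fine-tuned network starts from the base network, the two share the same architecture, so their layers line up one to one and can be mixed this way.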

You can use Toonify right now through the link in the references below if you would like to try it out on your own pictures, or any others you can think of.

A quick note from the authors before testing this yourself:

- To produce great results, the original image must be of fairly high quality, with the face around 512 by 512 pixels.

- Faces in profile and faces smaller than 128 by 128 pixels are unlikely to be found by the network.
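If you want to check your pictures before uploading them, the two notes above can be turned into a tiny helper. This is only a sketch: the function name is made up, and treating 128 and 512 pixels as hard cut-offs is my simplification of the authors' guidance.

```python
def face_size_advice(width, height):
    """Rough advice for a face crop measured in pixels (hypothetical helper)."""
    shortest = min(width, height)
    if shortest < 128:
        return "too small: the face is unlikely to be detected"
    if shortest < 512:
        return "usable, but results may be lower quality"
    return "good"

advice_small = face_size_advice(100, 120)
advice_medium = face_size_advice(300, 320)
advice_large = face_size_advice(512, 600)
```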

Have fun, and please share your results in the comment section with a link to your images or tag me on Twitter using @whats_ai. I would love to see them!

If you like my work and want to support me, I’d greatly appreciate it if you follow me on my social media channels:

- The best way to support me is by following me on Medium.

- Subscribe to my YouTube channel.

- Follow my projects on LinkedIn.

- Learn AI together, join our Discord community, share your projects, papers, best courses, find Kaggle teammates, and much more!

References:

Toonify: https://gumroad.com/a/391640179

StyleGAN2: https://github.com/NVlabs/stylegan2

Paper: https://arxiv.org/pdf/2010.05334.pdf

Google Colab for Toonify: https://colab.research.google.com/drive/1s2XPNMwf6HDhrJ1FMwlW1jl-eQ2-_tlk?usp=sharing#scrollTo=tcWXgS5DXata