CVPR 2022 Best Paper Honorable Mention: Dual-Shutter Optical Vibration Sensing

They reconstruct sound using cameras and a laser beam on any vibrating surface, allowing them to isolate musical instruments, focus on a specific speaker, remove ambient noise, and many more amazing applications.

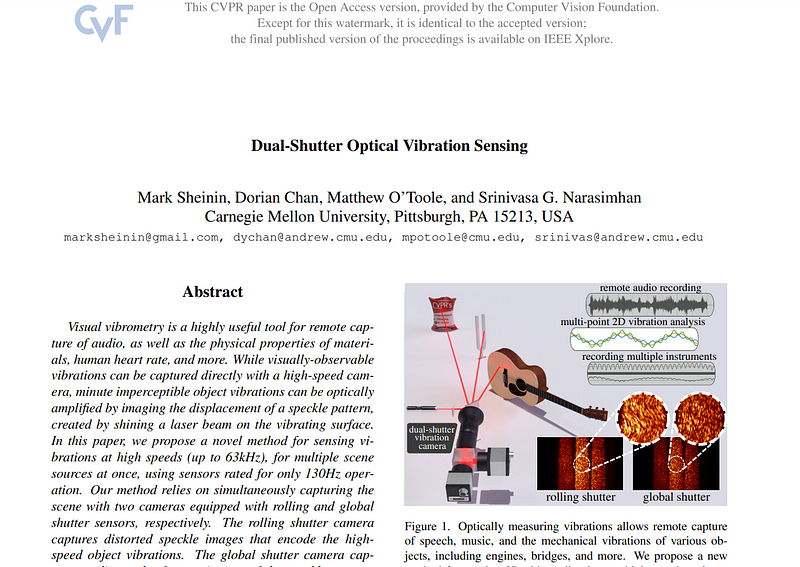

This year I had the chance to be at CVPR in person and attend the best paper award presentations, including a fantastic paper I had to cover on the channel: Dual-Shutter Optical Vibration Sensing by Mark Sheinin, Dorian Chan, Matthew O’Toole, and Srinivasa Narasimhan.

In one sentence: they reconstruct sound using cameras and a laser beam on any vibrating surface, allowing them to isolate musical instruments, focus on a specific speaker, remove ambient noise, and many more amazing applications.

Let’s dive into how they achieve that and hear some crazy results.

You should watch the video and hear the results, or go on their website and listen to the examples…

In the first example shown in the video, you could clearly hear the two individual guitars on each audio track.

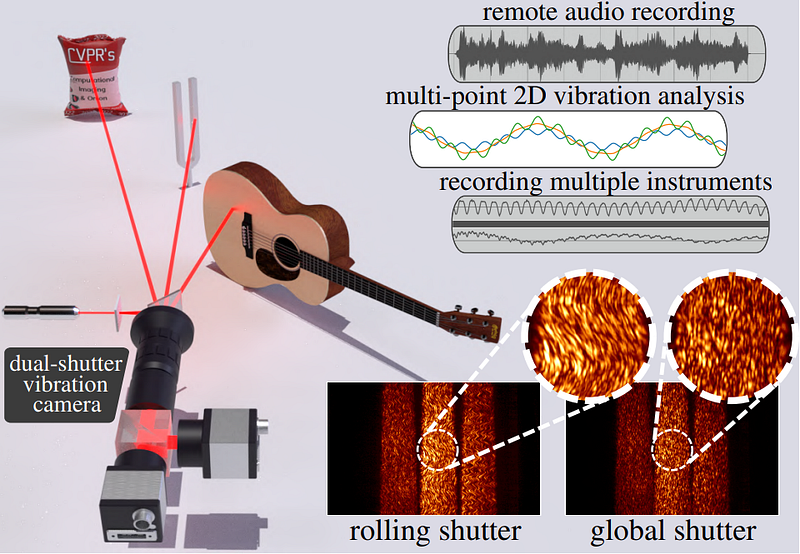

These tracks were made not from recorded sound but with a laser and two cameras, one equipped with a rolling-shutter sensor and the other with a global-shutter sensor. It seems that tackling this task through vision makes it much easier than trying to split the audio tracks after recording. It also means we can record through glass and from any vibrating object. Here (see video), they used their method on the speakers themselves to isolate the left and right speakers, whereas a microphone would automatically record both and blend the audio tracks.

Typically, this kind of spy technology, called visual vibrometry, requires perfect lighting conditions and high-speed cameras that look like a camouflaged sniper to capture high-speed vibrations. Here, they sample vibrations at rates of up to 63 kHz with sensors built for only 60 and 130 Hz! And even better: they can process multiple objects at once!

Still, this is a very challenging task requiring a lot of engineering and great ideas to make it happen. They do not simply record the instruments and send the video to a model that automatically creates and separates the audio. They first need to understand the laser signal they receive and process it correctly.

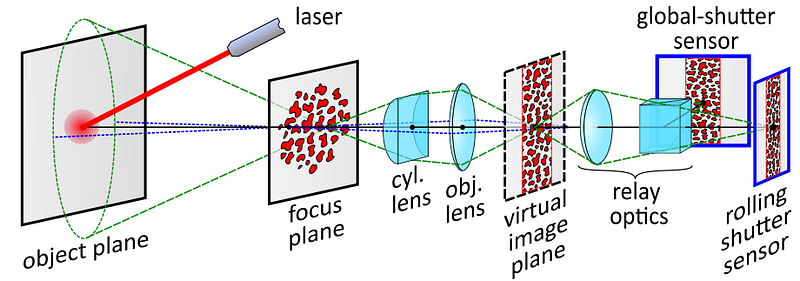

They point a laser at the surface they want to listen to. The laser light then bounces off the surface onto a focus plane. This focus plane is where we will take our information from, not the instruments or objects themselves. So we only analyze the tiny vibrations of the objects of interest through the laser response, which creates a representation like this:

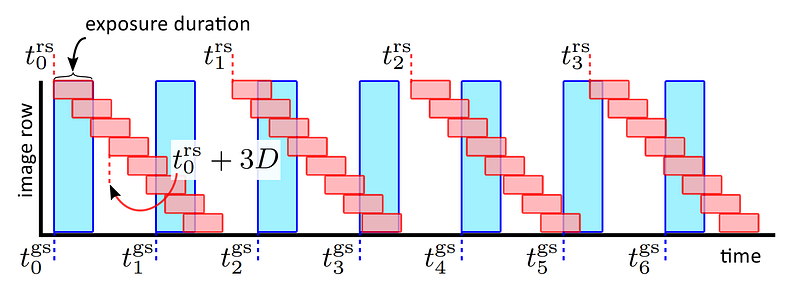

This 2-dimensional laser response pattern caught by our cameras, called a speckle, is then processed both globally and locally using our two cameras. Our local camera, or rolling-shutter camera, captures frames at only 60 fps, but it exposes each frame one row at a time along the y axis, so every row is a slightly later sample in time. Reading the rows in sequence gives a really noisy and inaccurate 63 kHz representation.
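
The 63 kHz figure can be sanity-checked with a quick back-of-the-envelope calculation. Here is a minimal Python sketch, assuming every row of a rolling-shutter frame counts as one time sample and ignoring any dead time between frames. The row count of 1,050 is a hypothetical value I picked so that the numbers land on 63 kHz; the real sensor's row count and readout timing may differ.

```python
def effective_sample_rate(frame_rate_hz, rows_per_frame):
    """Samples per second if each row of each frame is one time sample."""
    return frame_rate_hz * rows_per_frame


# 60 fps comes from the article; 1050 rows is an assumed, illustrative value.
frame_rate_hz = 60
rows_per_frame = 1050

rate_hz = effective_sample_rate(frame_rate_hz, rows_per_frame)
print(f"{rate_hz / 1000} kHz")
```

The point is simply that the sampling rate scales with the number of rows, not with the frame rate alone, which is how a 60 fps sensor can reach tens of kilohertz.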

This is where the global-shutter camera becomes necessary. The speckle pattern is essentially random because it depends on the roughness of the object’s surface and on its movements, so the rows captured by the rolling-shutter camera are hard to interpret on their own. The global-shutter camera basically takes a global screenshot of the same speckle image seen by our first camera, and this new image is used as a reference frame to isolate only the relevant vibrations from the rolling-shutter captures. In short, the rolling-shutter camera samples the scene row by row at a high frequency, while the global-shutter camera samples the entire scene at once to serve as a reference frame, and we repeat this process for the whole video.
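
To make the reference-frame idea more concrete, here is a heavily simplified sketch in Python with NumPy. It is not the authors' algorithm: it assumes a synthetic, noiseless speckle image, purely horizontal integer shifts, and one shift per row, and the function name `estimate_row_shifts` is my own. Each row of the rolling-shutter frame is compared with the same row of the reference frame using cross-correlation, and the position of the correlation peak tells us how far the speckle moved at that moment.

```python
import numpy as np


def estimate_row_shifts(rolling_frame, reference_frame):
    """Estimate one signed horizontal shift per row (toy model, integer shifts)."""
    # Remove each row's mean so the correlation peak is not dominated by brightness.
    rolling = rolling_frame - rolling_frame.mean(axis=1, keepdims=True)
    reference = reference_frame - reference_frame.mean(axis=1, keepdims=True)

    # Circular cross-correlation of every row with its reference row, via the FFT.
    spectrum = np.fft.fft(rolling, axis=1) * np.conj(np.fft.fft(reference, axis=1))
    correlation = np.fft.ifft(spectrum, axis=1).real

    # The peak position is the shift; wrap large values around to negative shifts.
    width = rolling_frame.shape[1]
    peaks = np.argmax(correlation, axis=1)
    return np.where(peaks > width // 2, peaks - width, peaks)


# Synthetic data: a random "speckle" reference, standing in for the global-shutter image.
rng = np.random.default_rng(0)
reference_frame = rng.standard_normal((8, 256))

# Toy vibration: each row (a later moment in time) sees the speckle shifted a bit.
true_shifts = np.array([0, 1, 3, 4, 3, 1, -2, -4])
rolling_frame = np.stack(
    [np.roll(row, shift) for row, shift in zip(reference_frame, true_shifts)]
)

estimated_shifts = estimate_row_shifts(rolling_frame, reference_frame)
print(estimated_shifts)
```

On this clean synthetic data the estimated shifts should match `true_shifts`, and the sequence of per-row shifts is the toy version of the vibration signal. Real speckle moves in two dimensions, by sub-pixel amounts, and with noise, so the actual method has to do considerably more work than this.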

And voilà!

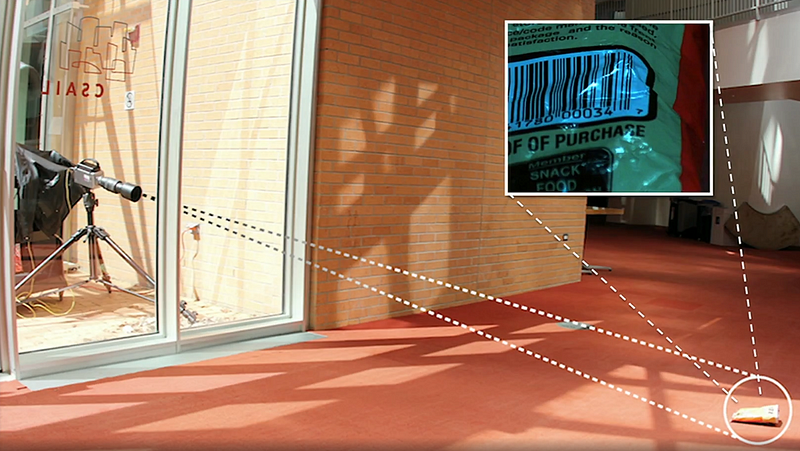

This is how they are able to split sound from a recording, extract only a single instrument, remove ambient noise or even reconstruct speech from the vibrations of a bag of chips (see video).

Of course, this is just a simple overview of this great paper, and I strongly invite you to read the paper, linked below, for more information. Congratulations to the authors for the honorable mention. I was glad to attend the event and see the presentation live. I’m super excited to see the future publications this paper will motivate.

I also invite you to double-check all the bags of chips you may leave near a window; otherwise, some people may listen to what you say!

Thank you for reading the whole article. Let me know how you’d apply this technology and if you see any potential risks or exciting use-cases. I’d love to discuss this with you.

And a special thanks to CVPR for inviting me to the event. It was really cool to be there in New Orleans with all the researchers and companies.

I will see you next week with another amazing paper!

References

►Sheinin, Mark and Chan, Dorian and O’Toole, Matthew and Narasimhan, Srinivasa G., 2022, Dual-Shutter Optical Vibration Sensing, Proc. IEEE CVPR.

►Project page: https://imaging.cs.cmu.edu/vibration/

►My Newsletter (A new AI application explained weekly to your emails!): https://www.louisbouchard.ai/newsletter/