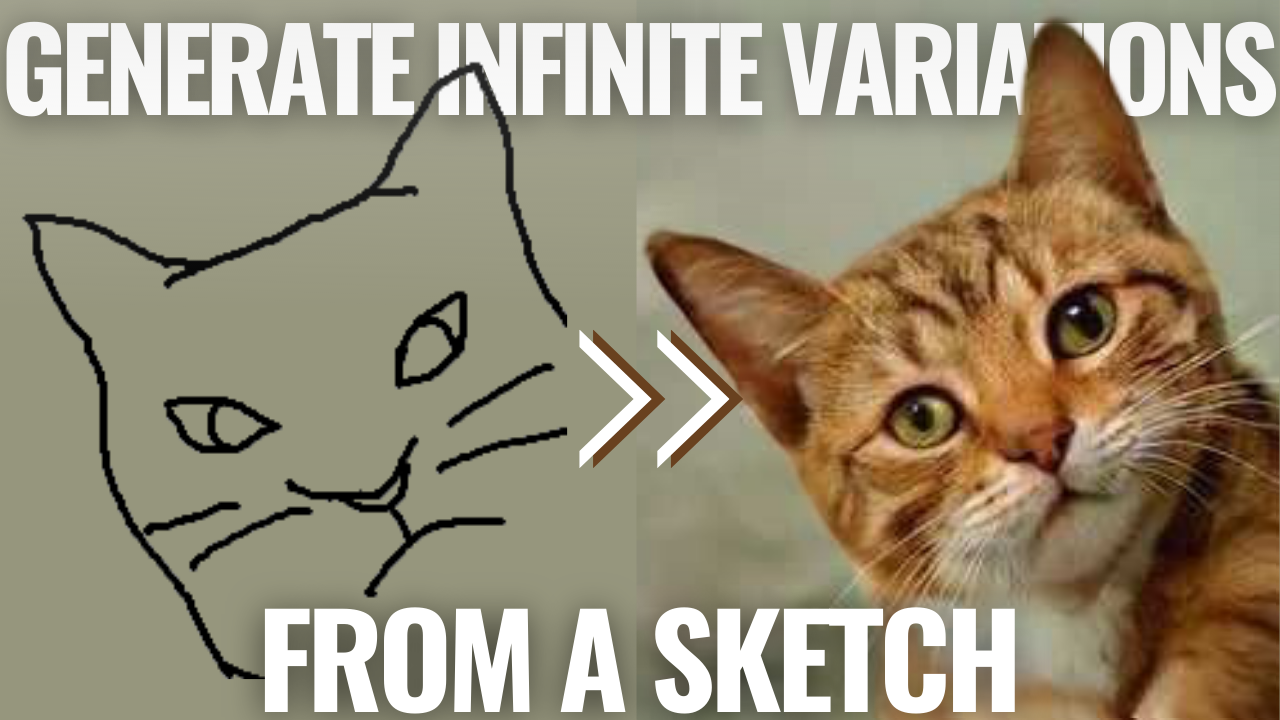

Make GANs Training Easier for Everyone : Generate Images Following a Sketch

Control GANs outputs based on the simplest type of knowledge you could provide it: hand-drawn sketches.

Watch the video and support me on YouTube!

Generative Adversarial Networks

Machine learning models can now generate new images based on what it has seen from an existing set of images. We can’t really say that the model is creative, as even though the image is indeed new, the results are always highly inspired by similar photos it has seen in the past. Such a type of architecture is called a generative adversarial network, or GAN. If you already know how GANs work, you can skip to the next section to learn what the researchers did new. If not, I’ll quickly go over how it works.

This powerful architecture basically takes a bunch of images and tries to imitate them. There are typically two networks, the generator, and the discriminator. Their names are pretty informative… The generator tries to generate a new image, and the discriminator tries to discriminate such images.

The training process goes as follows: the discriminator is shown either an image coming from our training dataset, which is our set of real images, or an image made by the generator called a fake image. Then, the discriminator tries to say whether the image was real or fake. If the image sent guessed real was fake, we say that the discriminator has been fooled, and we update its parameters to improve its detection ability for the next try. In reverse, if the discriminator guessed right, saying it was fake, the generator is penalized and updated the same way, thus improving the quality of the future generated image. This process is repeated over and over until the discriminator is fooled half the time, meaning that the generated images are very similar to what we have in our real dataset. So the generated images now look like they were picked from our dataset, having the same style.

If you’d like to have more details about how a generator and a discriminator model work and what they look like on the inside, I’d recommend reading one of the many articles I made covering them, like this one about Toonify.

The problem here is that this process has been a black box for a while, and it is extremely difficult to train, especially to control what kind of images are generated. There has been a lot of progress in understanding what part of the generator network is responsible for what.

Sketch Your Own GAN [1]

Traditionally, building a model with control on the generated images’ style to produce what we want, like generating images of cats with a specific position,

needs specialized knowledge in deep learning, engineering work, patience, and a lot of trial and error. It would also need a lot of image examples, manually curated, of what you aim to generate and a great understanding of how the model works to adapt it for your own needs correctly. And repeat this process for any change you would like to make.

Instead, this new method by Sheng-Yu Wang et al. from Carnegie Mellon University and MIT called Sketch Your Own GAN can take an existing model, for example, a generator trained to generate new images of cats,

and control the output based on the simplest type of knowledge you could provide it: hand-drawn sketches. Something anyone can do, making GANs training a lot more accessible. No more hard work and model tweaking for hours to generate the cat in the position you wanted by figuring out which part of the model is in charge of which component in the image!

How cool is that? It surely at least deserves sharing this article in your group chat! ;) Of course, there’s nothing special in generating a cat in a specific position, but imagine how powerful this can be. It can take a model trained to generate anything, and from a handful of sketches, control what will appear while conserving the other details and the same style! It is an architecture to re-train a generator model, encouraging it to produce images with the structure provided by the sketches while preserving the original model’s diversity and the maximum image quality possible. This is also called fine-tuning a model, where you take a powerful existing model and adapt it to perform better for your task.

Imagine you really wanted to build a gabled church but didn’t know the colors or specific architecture? Just send the sketch to the model and get infinite inspiration for your creation! Of course, this is still early research, and it will always follow the style in your dataset you used to train the generator, but still, the images are all *new* and can be surprisingly beautiful!

But how did they do that? What have they figured out about generative models that can be taken advantage of to control the output?

There are various challenges for such a task, like the amount of data and the model expertise needed. The data problem is fixed by using a model that was already trained, which we are simply trying to adapt to our task using a handful of sketches instead of hundreds or thousands of sketches and image pairs which are typically needed. To attack the expertise problem, instead of manually figuring out the changes to make to the model, they transform the generated image into a sketch representation using another model trained to do that, called Photosketch. Then, the generator is trained similarly to a traditional GAN training but with two discriminators instead of one.

The first discriminator is used to control the quality of the output, just like a regular GAN architecture would have following the same training process we described earlier.

The second discriminator is trained to tell the difference between the generated sketches and the sketches made by the user. Thus encouraging the generated images to match the user sketches structure similarly to how the first discriminator encourages the generated images to match the images in the initial training dataset.

This way, the model figures out by itself which parameters to change to fit this new task of imitating the sketches and removing the model expertise requirements to play with generative models.

This field of research is exciting, allowing anyone to play with generative models and control the outputs. It is much closer to something that could be useful in the real world than the initial models, where you would need a lot of time, money, and expertise to build a model able to generate such images. Instead, from a handful of sketches anyone can do, the resulting model can produce an infinite number of new images that resemble the input sketches allowing many more people to play with these generative networks.

Let me know what you think and if this seems as exciting to you as it is to me! If you’d like more detail on this technique, I’d strongly recommend reading their paper linked below!

Thank you for reading!

Come chat with us in our Discord community: Learn AI Together and share your projects, papers, best courses, find Kaggle teammates, and much more!

If you like my work and want to stay up-to-date with AI, you should definitely follow me on my other social media accounts (LinkedIn, Twitter) and subscribe to my weekly AI newsletter!

To support me:

- The best way to support me is by being a member of this website or subscribe to my channel on YouTube if you like the video format.

- Support my work financially on Patreon

References:

- Sheng-Yu Wang et all, “Sketch Your Own GAN”, 2021, https://arxiv.org/pdf/2108.02774v1.pdf

- Project link: https://peterwang512.github.io/GANSketching/

- Code: https://github.com/PeterWang512/GANSketching