The AI Monthly Top 3 — September 2021

The 3 most interesting AI papers this month, with video demos, short articles, code, and paper references.

Here are the 3 most interesting research papers of the month, in case you missed any of them. It is a curated list of the latest breakthroughs in AI and Data Science, sorted by release date, each with a clear video explanation, a link to a more in-depth article, and code (if applicable). Enjoy the read, and let me know in the comments, or by contacting me directly on LinkedIn, if I missed any important papers!

If you’d like to read more research papers as well, I recommend reading my article where I share my best tips for finding and reading them.

Paper #1:

StyleCLIP: Text-driven manipulation of StyleGAN imagery [1]

AI could already generate images, and with a lot of brainpower and trial and error, researchers could control the results to follow specific styles. Now, with this new model, you can do that using only text!
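To give a feel for how text can steer a generator, here is a toy sketch of the latent-optimization idea, one of the approaches the StyleCLIP paper explores: nudge the latent code of an image so that the image's embedding moves closer to the embedding of a text prompt, while an L2 penalty keeps the edit close to the original. Everything here is a hypothetical stand-in: random linear maps replace StyleGAN and CLIP, the vector `t` plays the role of a text embedding, and the names `optimize_latent` and `clip_loss` are made up for illustration. The paper's version uses the real networks and additional loss terms, which this sketch leaves out.

```python
import numpy as np

rng = np.random.default_rng(0)
latent_dim, image_dim, embed_dim = 8, 32, 16

# Hypothetical stand-ins: G(w) = A @ w plays the generator, E(x) = B @ x plays
# CLIP's image encoder. The scaling keeps values of order one.
A = rng.normal(size=(image_dim, latent_dim)) / np.sqrt(latent_dim)
B = rng.normal(size=(embed_dim, image_dim)) / np.sqrt(image_dim)
M = B @ A  # latent code -> image embedding


def unit(v):
    return v / np.linalg.norm(v)


def clip_loss(w, t):
    """1 - cosine similarity between the embedding of G(w) and the text embedding t."""
    return 1.0 - unit(M @ w) @ unit(t)


def optimize_latent(w_source, t, lam=0.1, lr=0.1, steps=500):
    """Gradient descent on clip_loss(w, t) + lam * ||w - w_source||^2."""
    w = w_source.copy()
    t_hat = unit(t)
    for _ in range(steps):
        e = M @ w
        n = np.linalg.norm(e)
        u = e / n
        # Gradient of (1 - cos(e, t)) with respect to e, pulled back through M.
        grad_e = -(t_hat - (u @ t_hat) * u) / n
        grad = M.T @ grad_e + 2.0 * lam * (w - w_source)
        w = w - lr * grad
    return w


w_source = rng.normal(size=latent_dim)  # latent code of the image to edit
t = rng.normal(size=embed_dim)          # stand-in for the prompt's text embedding
w_edit = optimize_latent(w_source, t)
print(f"loss before: {clip_loss(w_source, t):.3f}, after: {clip_loss(w_edit, t):.3f}")
```

The `lam` weight is the knob that trades "match the text" against "stay close to the original image"; raising it gives subtler edits.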

Watch the video

A short read version

Code (use with local GUI or Colab notebook): https://github.com/orpatashnik/StyleCLIP

Paper #2:

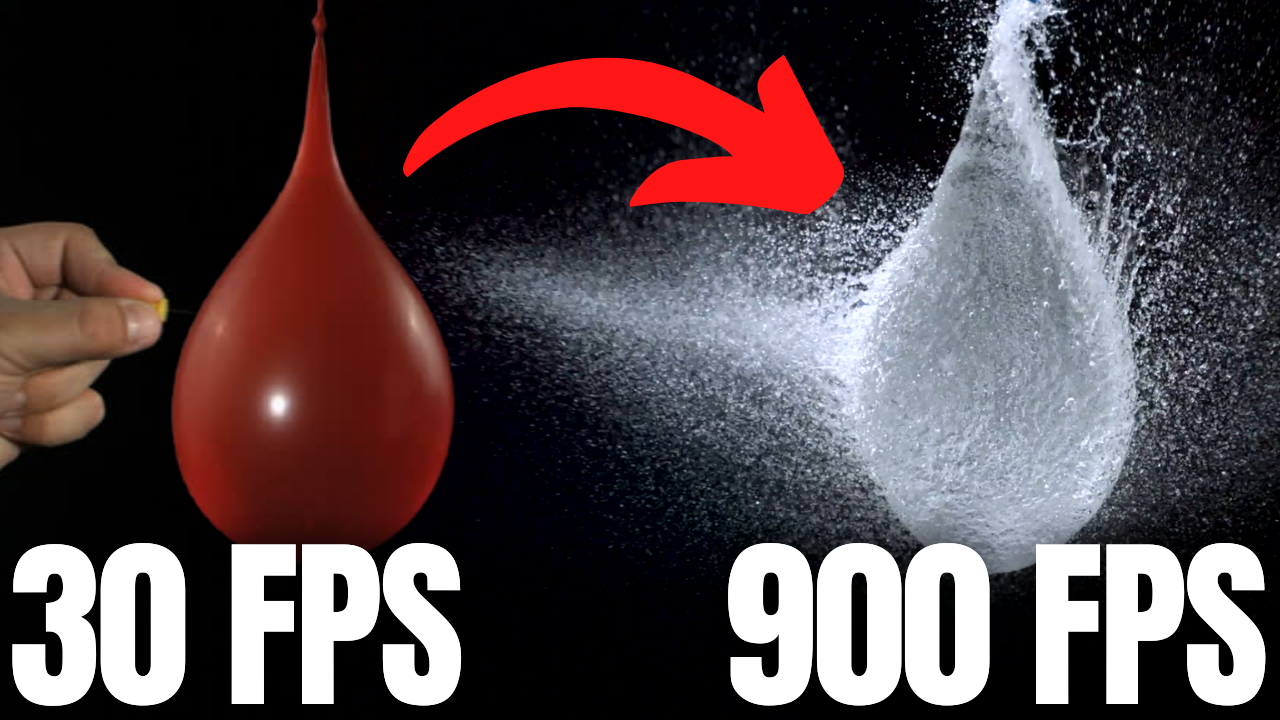

TimeLens: Event-based Video Frame Interpolation [2]

TimeLens combines a video's frames with the data from an event camera, which records brightness changes in between the frames, to reconstruct what really happened at a speed even our eyes cannot see. In fact, it achieves results that neither our smartphones nor any previous model could reach!
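The reason an event camera helps is that, instead of full frames, it reports per-pixel brightness changes as they happen. Under the idealized event model, each event (x, y, t, polarity) means the log-intensity at that pixel rose or fell by a fixed contrast threshold, so summing the events that arrive after a frame gives a rough estimate of the image at any in-between time. The sketch below shows only that idealized integration; TimeLens itself relies on trained networks and also warps the neighboring frames, so this is not its pipeline. The function name `integrate_events`, the contrast value of 0.2, and the event tuples are hypothetical choices for illustration.

```python
import numpy as np


def integrate_events(key_frame, events, t_target, t_key=0.0, contrast=0.2, eps=1e-3):
    """Estimate the frame at time t_target from a key frame captured at t_key.

    Each event is (x, y, t, polarity) with polarity +1 or -1, and is assumed to
    mean that the log-intensity at pixel (x, y) changed by +/- contrast at time t.
    """
    log_img = np.log(key_frame.astype(float) + eps)  # eps avoids log(0)
    for x, y, t, polarity in events:
        if t_key < t <= t_target:  # only events between the key frame and the target time
            log_img[y, x] += contrast * polarity
    return np.exp(log_img) - eps


key = np.full((2, 2), 0.5)  # 2x2 grayscale key frame captured at t = 0
events = [(0, 0, 0.1, +1), (0, 0, 0.3, +1), (1, 1, 0.2, -1), (1, 0, 0.8, +1)]
mid_frame = integrate_events(key, events, t_target=0.5)
print(mid_frame)
```

Here pixel (0, 0) brightens because two positive events arrive before t = 0.5, pixel (1, 1) darkens, and the event at t = 0.8 is ignored because it happens after the requested time.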

Watch the video

A short read version

Official code: https://github.com/uzh-rpg/rpg_timelens

Paper #3:

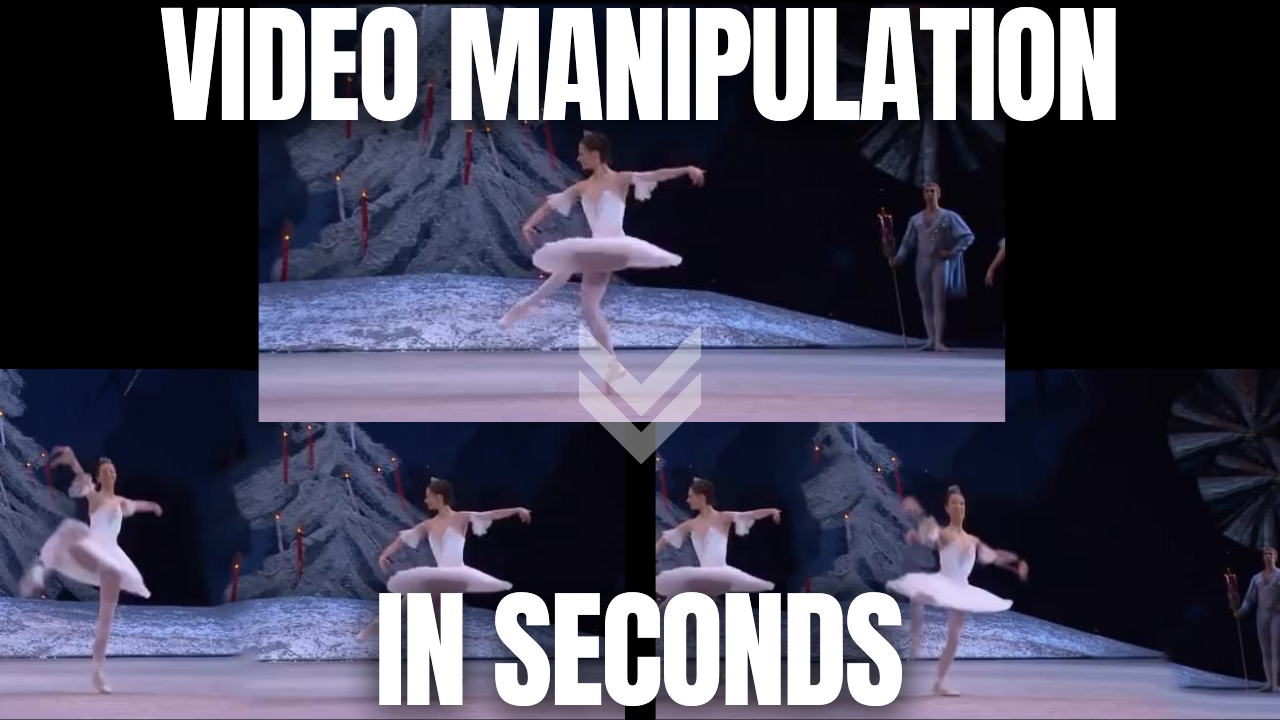

Diverse Generation from a Single Video Made Possible [3]

Have you ever wanted to edit a video?

Remove or add someone, change the background, make it last a bit longer, or change the resolution to fit a specific aspect ratio without compressing or stretching it. Those of you who have already run advertising campaigns certainly wanted variations of your videos for A/B testing to see what works best. Well, this new research by Niv Haim et al. can help you do all of this from a single video, and in HD!

Indeed, from a single video, you can perform any of the tasks I just mentioned in seconds, or in a few minutes for high-quality videos. You can use it for basically any video manipulation or video generation application you have in mind. It even outperforms GAN-based approaches, and it doesn’t rely on fancy deep learning or require a huge, impractical dataset! And the best thing is that this technique scales to high-resolution videos.
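As I understand it, the method builds on patch nearest neighbors (the project page is named VGPNN) rather than on a trained network. The core step is easy to sketch in one dimension: cut the query into overlapping patches, replace each one with its closest patch from the source, and average where patches overlap. The real method works on spatio-temporal video patches and repeats this step coarse-to-fine across several resolutions; the 1D signals and the name `patch_nn_step` below are simplifications for illustration.

```python
import numpy as np


def patch_nn_step(query, source, size=3):
    """Replace every patch of `query` by its nearest patch in `source`, then average overlaps."""
    src = np.lib.stride_tricks.sliding_window_view(source, size)  # (num_src_patches, size)
    qry = np.lib.stride_tricks.sliding_window_view(query, size)   # (num_query_patches, size)
    # Squared L2 distance between every query patch and every source patch.
    dist = ((qry[:, None, :] - src[None, :, :]) ** 2).sum(axis=2)
    nearest = src[dist.argmin(axis=1)]
    # Fold the chosen patches back into a signal, averaging where they overlap.
    out = np.zeros(len(query))
    counts = np.zeros(len(query))
    for i, patch in enumerate(nearest):
        out[i:i + size] += patch
        counts[i:i + size] += 1
    return out / counts


source = np.array([0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 1.0, 1.0, 0.0, 0.0])
noisy = source + np.random.default_rng(0).normal(scale=0.05, size=source.shape)
print(patch_nn_step(noisy, source))  # snaps back to patches that exist in the source
```

Because every output value comes from a patch of the source, the result always "looks like" the input video's content; in the full coarse-to-fine setting, starting from a perturbed or edited coarse version is what yields new variations.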

Watch the video

A short read version

Code (available soon): https://nivha.github.io/vgpnn/

Bonus for AI professionals

8 Tools Anyone in AI Should Know

Two years ago, I saw my first research paper ever. I remember how old it looked and how discouraging the mathematics inside was. It really looked like what researchers work on in the movies. To be fair, the paper was from the 1950s, but papers haven’t changed much since then. Fast-forward to today: I’ve gained a lot of experience reading them, having read a few hundred papers in the last year for my YouTube channel, where I try to explain them simply. Still, I know how overwhelming a first read can be, especially when it is your very first research paper. This is why I wanted to share my best tips and the practical tools I use daily to simplify my life and be more efficient when finding and reading interesting research papers.

Here are the most useful tools I use daily as a research scientist for finding and reading AI research papers… Read more here.

If you like my work and want to stay up-to-date with AI, you should definitely follow me on my other social media accounts (LinkedIn, Twitter) and subscribe to my weekly AI newsletter!

To support me:

- The best way to support me is by following me here or on Medium, or by subscribing to my YouTube channel if you like the video format.

- Support my work on Patreon.

- Join our Discord community, Learn AI Together, to share your projects, papers, and best courses, find Kaggle teammates, and much more!

References

[1] Patashnik, O., et al. (2021). StyleCLIP: Text-driven manipulation of StyleGAN imagery. https://arxiv.org/abs/2103.17249

[2] Tulyakov, S., Gehrig, D., Georgoulis, S., Erbach, J., Gehrig, M., Li, Y., & Scaramuzza, D. (2021). TimeLens: Event-based video frame interpolation. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Nashville. http://rpg.ifi.uzh.ch/docs/CVPR21_Gehrig.pdf

[3] Haim, N., Feinstein, B., Granot, N., Shocher, A., Bagon, S., Dekel, T., & Irani, M. (2021). Diverse generation from a single video made possible. https://arxiv.org/abs/2109.08591